ChatTTS has been quite popular in recent days. It bills itself as a text-to-speech model designed specifically for conversational scenarios. I downloaded it to try it out and found that the open source version still falls well short of the promotional video. According to the project, the restriction is intentional:

ChatTTS is a powerful text-to-speech system. However, it is important to use this technology responsibly and ethically. To limit the use of ChatTTS, we added a small amount of high-frequency noise during the training of the 40,000-hour model and degraded the audio quality as much as possible using the mp3 format, to prevent malicious actors from using it for criminal purposes. At the same time, we have trained a detection model internally and plan to open source it in the future.

Still, it is usable. Let's start by building a web interface and a ready-to-run package to make it easier to use. This article covers three parts:

1. Deploy ChatTTS from source code

2. Build the web interface

3. Use it in a video translation and dubbing tool

Open source address: https://github.com/jianchang512/chatTTS-ui

Deploy ChatTTS from source code

- Assume the code will be stored in E:/python/chat. Make sure the chat directory is empty, open it, type cmd in the address bar and press Enter, then run the command git clone https://github.com/2noise/ChatTTS . (the trailing dot clones into the current directory, which is why it must be empty). The git client can be downloaded from https://github.com/git-for-windows/git/releases/download/v2.45.1.windows.1/Git-2.45.1-64-bit.exe

- Install the dependencies: pip install -r requirements.txt

- For ease of use, install two additional modules: pip install modelscope soundfile

- Download the model. By default it is downloaded from huggingface.co, which may be unreachable on some networks without a proxy, so use modelscope instead.

Key Code

    from modelscope import snapshot_download

    # Download to the models folder in the current directory
    # and return the local model directory
    CHATTTS_DIR = snapshot_download('pzc163/chatTTS', cache_dir="./models")

Then, when calling load_models, set the source to local and pass the local model path

    chat = ChatTTS.Chat()
    chat.load_models(source="local", local_path=CHATTTS_DIR)

- Test it out

      import ChatTTS
      import soundfile as sf
      from modelscope import snapshot_download

      CHATTTS_DIR = snapshot_download('pzc163/chatTTS', cache_dir="./models")
      chat = ChatTTS.Chat()
      chat.load_models(source="local", local_path=CHATTTS_DIR)
      wavs = chat.infer(["Do you know I'm waiting for you? Do you really care about me?"], use_decoder=True)

wavs[0] is the resulting audio data. One pitfall: the official IPython Audio example may fail to play it, so save it locally with soundfile and play the file instead.

    # 24000 is the sample rate in Hz
    sf.write('1.wav', wavs[0][0], 24000)

If all goes well, you should hear a fairly realistic human voice.
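If soundfile is not available, the standard library can write an equivalent file. The following is a minimal sketch, not part of ChatTTS: it assumes the samples are floats in the range [-1, 1] (an assumption about what wavs[0][0] contains) and converts them to 16-bit PCM at 24000 Hz. The helper name write_wav_16bit and the generated test tone are hypothetical stand-ins.

```python
import math
import os
import struct
import tempfile
import wave


def write_wav_16bit(path, samples, sample_rate=24000):
    """Write mono float samples in [-1, 1] as a 16-bit PCM WAV file."""
    frames = bytearray()
    for s in samples:
        s = max(-1.0, min(1.0, float(s)))  # clip so the value fits in int16
        frames += struct.pack('<h', int(round(s * 32767)))
    with wave.open(path, 'wb') as wf:
        wf.setnchannels(1)        # mono
        wf.setsampwidth(2)        # 2 bytes = 16 bits per sample
        wf.setframerate(sample_rate)
        wf.writeframes(bytes(frames))


# Stand-in for wavs[0][0]: one second of a 440 Hz tone
tone = [0.3 * math.sin(2 * math.pi * 440 * n / 24000) for n in range(24000)]
out_path = os.path.join(tempfile.gettempdir(), 'chattts_demo.wav')
write_wav_16bit(out_path, tone)
```

With real output, the call would look like write_wav_16bit('1.wav', wavs[0][0]), provided the assumption about the sample range holds.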

Build a web interface

Flask is a natural first choice for simple pages, with waitress as the WSGI server.

- First, install both: pip install flask waitress

- Set static directory and template directory

      app = Flask(__name__, static_folder='./static', static_url_path='/static', template_folder='./templates')

      # Serve files under /static/; <path:filename> captures the rest of the URL
      @app.route('/static/<path:filename>')
      def static_files(filename):
          # app.static_folder is the directory passed to Flask() above
          return send_from_directory(app.static_folder, filename)

      @app.route('/')
      def index():
          return render_template("index.html")

- Create an API interface to synthesize the received text into speech

      import datetime
      import hashlib

      import soundfile as sf
      import torch

      # WAVS_DIR (output folder served under /static/wavs) and WEB_ADDRESS
      # (host:port) are assumed to be defined elsewhere in the app.

      # params
      # text: text to be synthesized
      # voice: timbre, an integer used as the random seed
      # prompt: prompt passed to params_refine_text
      @app.route('/tts', methods=['GET', 'POST'])
      def tts():
          # original string
          text = request.args.get("text", "").strip() or request.form.get("text", "").strip()
          prompt = request.form.get("prompt", '')
          try:
              voice = int(request.form.get("voice", '2222'))
          except Exception:
              voice = 2222
          # speed is parsed here but only used in the file name hash below
          try:
              speed = float(request.form.get("speed", 1))
          except Exception:
              speed = 1.0
          if not text:
              return jsonify({"code": 1, "msg": "text params lost"})

          # Sample a speaker embedding; the seed makes the timbre repeatable
          std, mean = torch.load(f'{CHATTTS_DIR}/asset/spk_stat.pt').chunk(2)
          torch.manual_seed(voice)
          rand_spk = torch.randn(768) * std + mean

          wavs = chat.infer([text], use_decoder=True,
                            params_infer_code={'spk_emb': rand_spk},
                            params_refine_text={'prompt': prompt})

          # File name: timestamp plus an MD5 of the request parameters
          md5_hash = hashlib.md5()
          md5_hash.update(f"{text}-{voice}-{speed}-{prompt}".encode('utf-8'))
          datename = datetime.datetime.now().strftime('%Y%m%d-%H_%M_%S')
          filename = datename + '-' + md5_hash.hexdigest() + ".wav"
          sf.write(WAVS_DIR + '/' + filename, wavs[0][0], 24000)
          return jsonify({"code": 0, "msg": "ok", "filename": WAVS_DIR + '/' + filename,
                          "url": f"http://{WEB_ADDRESS}/static/wavs/{filename}"})
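The file naming step in tts() can be pulled out into a small helper, which makes it easy to check in isolation. This is only a sketch of the timestamp-plus-MD5 scheme used above, hashing the text, voice, speed, and prompt values; the function name make_wav_filename and the now parameter are hypothetical additions for illustration.

```python
import datetime
import hashlib


def make_wav_filename(text, voice, speed, prompt, now=None):
    """Build '<timestamp>-<md5 of the parameters>.wav'."""
    now = now or datetime.datetime.now()
    key = f"{text}-{voice}-{speed}-{prompt}".encode('utf-8')
    digest = hashlib.md5(key).hexdigest()  # 32 hex characters
    return now.strftime('%Y%m%d-%H_%M_%S') + '-' + digest + '.wav'


# A fixed timestamp makes the result repeatable for the demo
fixed = datetime.datetime(2024, 6, 1, 12, 30, 0)
name = make_wav_filename("hello", 2222, 1.0, "", now=fixed)
print(name)
```

Because the hash depends only on the request parameters, two identical requests made within the same second map to the same file name, while changing any parameter changes the name.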

Note how the timbre is obtained:

    std, mean = torch.load(f'{CHATTTS_DIR}/asset/spk_stat.pt').chunk(2)
    torch.manual_seed(voice)
    rand_spk = torch.randn(768) * std + mean

This samples a timbre at random. Because the generator is seeded with the voice value, the same number always produces the same timbre. ChatTTS does not currently provide a friendly timbre selection interface.
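To see why a fixed voice value keeps giving the same timbre, here is a standard-library analogy rather than the torch code itself: a seeded generator draws Gaussian values that are scaled by std and shifted by mean. The dimension 768 comes from the code above; the function name sample_embedding and the scalar std and mean defaults are made up for illustration, whereas ChatTTS loads std and mean as tensors from spk_stat.pt.

```python
import random


def sample_embedding(seed, dim=768, std=1.0, mean=0.0):
    """Draw a repeatable pseudo-random vector for a given seed."""
    rng = random.Random(seed)  # private generator, seeded like torch.manual_seed
    return [rng.gauss(0.0, 1.0) * std + mean for _ in range(dim)]


a = sample_embedding(2222)
b = sample_embedding(2222)
c = sample_embedding(3333)
print(a == b)  # True: the same seed reproduces the same vector
print(a == c)  # False: a different seed gives a different vector
```

In practice this means a voice number that sounds good can simply be written down and reused in later requests.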

- Start flask

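      # These imports belong at the top of the file, before app is created
      from flask import Flask, request, render_template, jsonify, send_file, send_from_directory
      from waitress import serve

      try:
          serve(app, host='127.0.0.1', port=9966)
      except Exception as e:
          # Report the failure instead of silently swallowing it
          print(f"Failed to start the server: {e}")

The front-end interface is built with bootstrap5. It is very simple, so the code is omitted.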

- Test it with python code

      import requests

      res = requests.post('http://127.0.0.1:9966/tts', data={
          "text": "Do you know I'm waiting for you? Do you really care about me?",
          "prompt": "",
          "voice": "2222",
      })
      print(res.json())
      # ok:    {code:0, msg:'ok', filename:filename.wav, url:http://127.0.0.1:9966/static/wavs/filename.wav}
      # error: {code:1, msg:"error"}
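The same call can be made with only the standard library, which also gives a natural place to handle the error case. This is a sketch under the assumptions stated in this article: the /tts endpoint, the form fields text, voice, and prompt, and a JSON reply with code, msg, and url. The function names and the 600-second timeout are hypothetical choices.

```python
import json
import urllib.parse
import urllib.request


def build_tts_request(text, voice=2222, prompt="", base_url="http://127.0.0.1:9966"):
    """Build a form-encoded POST request for the /tts endpoint."""
    data = urllib.parse.urlencode(
        {"text": text, "voice": str(voice), "prompt": prompt}
    ).encode("utf-8")
    return urllib.request.Request(base_url + "/tts", data=data, method="POST")


def extract_url(payload):
    """Return the audio URL, or raise if the service reported an error."""
    if payload.get("code") != 0:
        raise RuntimeError(payload.get("msg", "unknown error"))
    return payload["url"]


def synthesize(text, **kwargs):
    """Send the request to a running service and return the audio URL."""
    req = build_tts_request(text, **kwargs)
    with urllib.request.urlopen(req, timeout=600) as resp:
        return extract_url(json.loads(resp.read().decode("utf-8")))
```

With the service running, synthesize("Do you know I'm waiting for you?", voice=2222) would return the URL of the generated wav file. Synthesis can be slow on a CPU, hence the generous timeout.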

Use in video translation and dubbing software

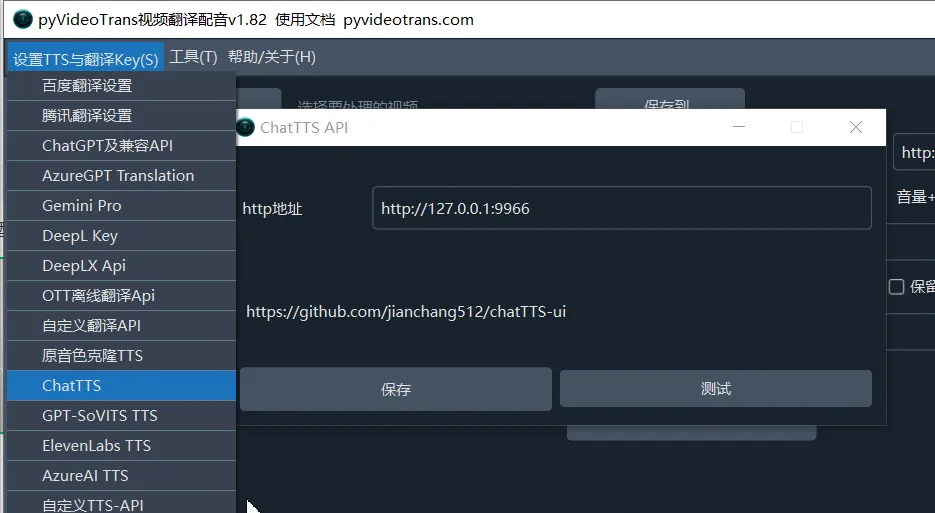

1. Use the Windows pre-packaged version or source code to deploy the ChatTTS UI project and start it. The open source address of the project is https://github.com/jianchang512/chatTTS-ui

2. Upgrade the video translation and dubbing software to version 1.82+, download address: https://pyvideotrans.com/downpackage.html

3. In the video translation and dubbing software, go to Menu > Settings > ChatTTS and enter the HTTP address of the service. The default is http://127.0.0.1:9966

4. ChatTTS is now ready to use for dubbing