With the rapid development of science and technology, artificial intelligence (AI) is no longer a technology of the distant future; it has gradually worked its way into every corner of our lives. From convenient smart-home control, to personalized recommendations in online services, to in-depth applications in industries such as healthcare and education, AI is changing the world. However, for many ordinary people, AI still seems like a high-tech field shrouded in mystery. So how can ordinary people like us try out AI technology?

When it comes to AI, many people will probably think of one name first: ChatGPT. This chatbot caused a sensation in the technology world. Its release was like a flash of lightning across the night sky of AI, letting the public truly feel the vast possibilities of artificial intelligence.

ChatGPT is a chatbot built on cutting-edge natural language processing and deep learning. It acts like a knowledgeable, versatile consultant that can give detailed answers to questions in almost any field. For content creators, it is a capable assistant: it can write code, draft creative copy, outline presentation slides, and polish documents so that articles read better, and some versions can also generate images. These are only the tip of the iceberg; the best way to understand what it can do is to try it yourself.

ChatGPT is also very simple to use: just open the webpage and log in to your account to start using its AI features.

It is worth mentioning that in April this year, OpenAI announced that ChatGPT (GPT-3.5) could be used for free without an account. In other words, if you just want to try the GPT-3.5 version of ChatGPT, you don't even need to sign up; simply open the ChatGPT website.

If you currently cannot access ChatGPT, many Chinese manufacturers have launched similar products, such as Baidu's Wenxin Yiyan (ERNIE Bot). According to Baidu, the overall capabilities of its Wenxin Large Model 4.0 are on par with GPT-4. Wenxin Yiyan is used in much the same way as ChatGPT: open the webpage and log in with your Baidu account. At present, Wenxin Large Model 3.5 is free to use, while Wenxin Large Model 4.0 requires a paid membership. Besides Wenxin Yiyan, there is a range of other Chinese AI applications, such as Tongyi Qianwen, Doubao, Zhipu Qingyan, Tencent Hunyuan and iFlytek Spark, all of which are used in a similar way.

All of the above fall into the category of "cloud AI". Put simply, with "cloud AI" the data processing happens on the provider's servers, and users only need to enter instructions through a webpage or app. This deployment model has three advantages:

1. Low cost: Cloud computing power is currently not very expensive, so many "cloud AI" services are free, and even paid memberships are reasonably priced. Take the Wenxin Yiyan VIP membership as an example: it currently costs only 49.9 yuan a month (with automatic monthly renewal, which can be cancelled at any time).

2. No special hardware required: Since the AI runs on the server, the user's own device has essentially no performance requirements. Any computer that can browse the web normally will do.

3. Ease of use: Since deployment, maintenance, and upgrades of "cloud AI" are handled by the provider, it is essentially ready to use. Users only need to open a webpage and log in to their account to use the AI features directly.

Deploy AI locally on existing hardware

Although "cloud AI" has many advantages, one problem cannot be ignored: data privacy. Samsung, for example, was reported to have leaked chip-related secrets through ChatGPT. Its employees had pasted confidential company information into ChatGPT as part of their questions, which sent that content to OpenAI's servers, where it could end up in the model's training data. Deploying a large model locally is a good way to avoid this kind of situation.

Because a locally deployed large model runs on your own device, your inputs are not sent to anyone else's servers. When you use it to process personal or confidential corporate information, you therefore don't have to worry about that information leaking through an AI service.

On the other hand, local devices usually have far less computing power than servers, so only relatively high-performance devices are suitable for running large models locally.

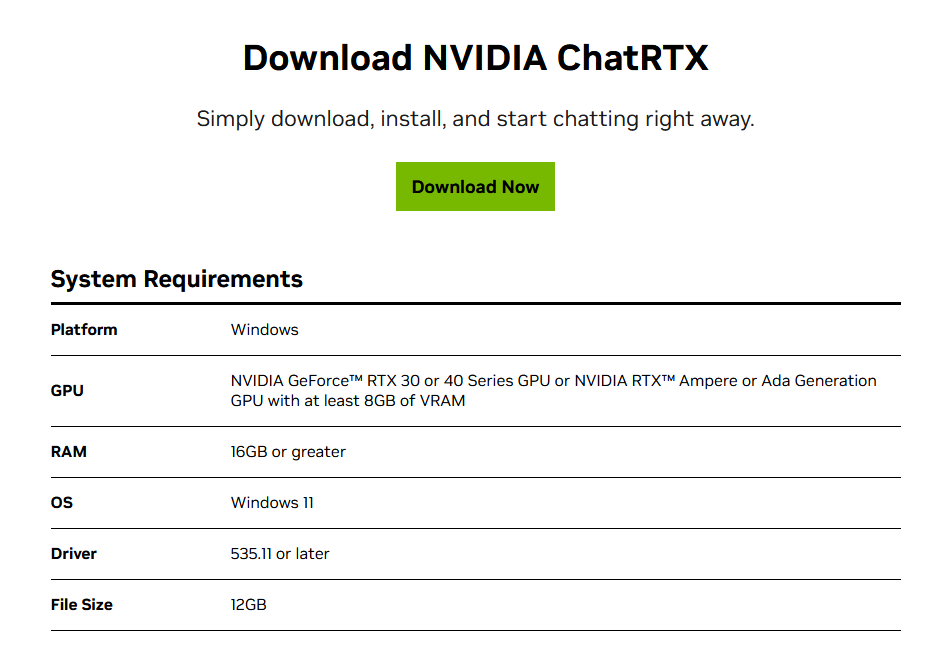

Take NVIDIA's Chat With RTX as an example. It is an application built on a large language model that lets users connect it to their own content (documents, notes, videos or other data). Using RAG (retrieval-augmented generation), TensorRT-LLM and RTX acceleration, it runs entirely on the user's Windows RTX PC or workstation, delivering fast and secure results.
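To make the RAG idea more concrete, here is a minimal sketch in Python. It is not how Chat With RTX works internally: it uses simple word-count cosine similarity as a stand-in for the embedding search a real RAG pipeline would use, the document names and contents are hypothetical, and the final generation step (feeding the prompt to a local model) is left out.

```python
import math
import re
from collections import Counter

# Hypothetical personal notes standing in for the user's local documents.
DOCUMENTS = {
    "meeting.txt": "The Q3 budget review moved to Friday; finance needs the draft by Thursday.",
    "travel.txt": "Flight to Shanghai departs Monday at 9:00; hotel is booked near the office.",
    "recipe.txt": "Mix flour, eggs and milk, then rest the batter for thirty minutes.",
}

def tokenize(text: str) -> list[str]:
    # Lower-case word tokens; a real RAG system would use embeddings instead.
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question: str, docs: dict[str, str], k: int = 1) -> list[str]:
    # Retrieval: rank the local documents by similarity to the question.
    q = Counter(tokenize(question))
    ranked = sorted(docs, key=lambda name: cosine(q, Counter(tokenize(docs[name]))), reverse=True)
    return ranked[:k]

def build_prompt(question: str, docs: dict[str, str], k: int = 1) -> str:
    # Augmentation: paste the retrieved text into the prompt that a locally
    # running model would then answer (the generation step is not shown).
    context = "\n".join(docs[name] for name in retrieve(question, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(retrieve("When does my flight to Shanghai leave?", DOCUMENTS))
```

Running it prints `['travel.txt']`. Because both the documents and the search stay on the machine, only the short retrieved passage is handed to the model, which is the same reason a locally run RAG setup keeps private files private.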

Chat With RTX has two main hardware requirements:

1. An RTX 30 or RTX 40 series graphics card with at least 8GB of video memory. Professional graphics cards of equivalent specifications also work.

2. At least 16GB of system memory (RAM).

As for the graphics card, even the desktop RTX 3050 (8GB) released in 2022 meets the requirement. The RTX 3050 has been a baseline configuration for "gaming computers" in recent years, so many existing gaming computers already meet these requirements.
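The two hardware requirements can be written down as a simple checklist. This Python sketch encodes only the thresholds listed above (RTX 30 or 40 series, at least 8GB of video memory, at least 16GB of RAM); the `PC` type and the `gpu_series` field are hypothetical names for illustration, and professional cards are not modeled.

```python
from dataclasses import dataclass

@dataclass
class PC:
    gpu_series: int  # 30 for an RTX 30-series card, 40 for RTX 40, 20 for RTX 20, ...
    vram_gb: int     # video memory on the graphics card, in GB
    ram_gb: int      # system memory, in GB

def missing_requirements(pc: PC) -> list[str]:
    """Return the requirements this PC fails; an empty list means it qualifies."""
    problems = []
    if pc.gpu_series not in (30, 40):
        problems.append("needs an RTX 30 or RTX 40 series GPU")
    if pc.vram_gb < 8:
        problems.append("needs at least 8 GB of video memory")
    if pc.ram_gb < 16:
        problems.append("needs at least 16 GB of system RAM")
    return problems

# A desktop RTX 3050 (8 GB) in a PC with 16 GB of RAM qualifies.
print(missing_requirements(PC(gpu_series=30, vram_gb=8, ram_gb=16)))  # []
# An RTX 20-series card with 8 GB fails only the GPU-series check.
print(missing_requirements(PC(gpu_series=20, vram_gb=8, ram_gb=16)))
```

Returning the list of failed checks, rather than a single yes/no, shows at a glance which part of an older machine would need upgrading.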

Deploying other large models locally may require somewhat more powerful hardware. Simply put, the more parameters a model has, the more video memory it needs. For example, with a 16GB graphics card, you should be able to run models of up to about 7B (7 billion) parameters locally without much trouble.
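The link between parameter count and video memory comes down to simple arithmetic: the memory needed for the weights is roughly the number of parameters times the bytes used per parameter. The Python sketch below assumes 2 bytes per parameter for 16-bit (FP16) weights and 1 or 0.5 bytes for 8-bit and 4-bit quantized weights, ignoring the small extra storage that quantization formats use for scaling factors. The function names are hypothetical.

```python
# Approximate bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Memory for the weights alone, in decimal GB.

    1 billion parameters x 1 byte = 10**9 bytes = 1 GB, so the
    billions-of-parameters figure can be multiplied directly.
    """
    return params_billion * BYTES_PER_PARAM[precision]

def headroom_gb(params_billion: float, precision: str, vram_gb: float) -> float:
    """Video memory left after loading the weights. This remainder must still
    hold the KV cache, activations and runtime buffers."""
    return vram_gb - weight_memory_gb(params_billion, precision)

for precision in ("fp16", "int8", "int4"):
    print(f"7B at {precision}: ~{weight_memory_gb(7, precision):.1f} GB of weights, "
          f"{headroom_gb(7, precision, 16):.1f} GB left on a 16 GB card")
```

At FP16 a 7B model takes about 14 GB for its weights, leaving only about 2 GB of headroom on a 16GB card, so it fits but is tight. At 8-bit or 4-bit the weights shrink to about 7 GB or 3.5 GB, which is why quantized versions are a common choice for running models locally.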

To summarize briefly, this deployment mode has two advantages:

1. Data is processed locally, so there is no need to worry about it leaking through a third-party server.

2. Most gaming computers bought in recent years can run these models, so there is no need to buy new equipment.

Deploy large models locally with an AI PC

As early as September 2023, Intel CEO Pat Gelsinger introduced a new concept of PC in Silicon Valley: the AI PC. Intel was also the first manufacturer to propose the AI PC concept. Put simply, an AI PC is a PC designed to run AI features on the device itself.

Specifically, an AI PC integrates a central processing unit (CPU), a graphics processing unit (GPU), and a neural processing unit (NPU), each with its own AI acceleration capabilities. The NPU is a dedicated accelerator that efficiently handles artificial intelligence and machine learning (ML) tasks directly on the PC instead of sending data to the cloud for processing. As a result, when AI tasks run locally on an AI PC, there is no need to worry about information leaking to the cloud.
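Software on such a machine has to decide which of the three engines runs a given AI task. Runtimes such as Intel's OpenVINO address them as separate devices named "CPU", "GPU" and "NPU". The Python sketch below shows one possible fallback policy; the task categories and preference order are assumptions loosely modeled on the common description of the three engines (CPU for quick responses, GPU for high throughput, NPU for sustained low-power work), not any vendor's actual scheduler.

```python
# Assumed preference order per kind of AI task (illustrative policy only).
# The CPU always appears as the last-resort fallback.
PREFERENCE = {
    "sustained": ["NPU", "GPU", "CPU"],   # long-running, low-power work, e.g. background blur in video calls
    "throughput": ["GPU", "NPU", "CPU"],  # heavy parallel jobs, e.g. generating an image
    "latency": ["CPU", "GPU", "NPU"],     # small, quick jobs where start-up cost dominates
}

def pick_device(task_kind: str, available: list[str]) -> str:
    """Return the first preferred device that this machine actually has."""
    for device in PREFERENCE[task_kind]:
        if device in available:
            return device
    raise RuntimeError(f"no usable device for {task_kind!r} among {available}")

ai_pc = ["CPU", "GPU", "NPU"]  # a laptop with an NPU-equipped processor
older_pc = ["CPU", "GPU"]      # no NPU

print(pick_device("sustained", ai_pc))     # NPU
print(pick_device("sustained", older_pc))  # GPU
print(pick_device("latency", ai_pc))       # CPU
```

The fallback order means the same software still works on a PC without an NPU; it just runs the task on a less power-efficient engine.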

Compared with conventional gaming computers, AI PCs are significantly lighter and more portable. Gaming computers usually need a discrete graphics card, which is bulky, requires a large cooling module, and in turn needs a higher-wattage power supply. As a result, gaming computers tend to be heavier and less portable, while AI PCs offer a lighter experience with a more compact design.

Therefore, for people who use AI at work and travel frequently, an AI PC is a good choice. After all, given the choice, few people would want to carry a "brick" (a gaming computer) when going out.

Currently, AI PCs on the market can be divided into four main camps according to the processors used, namely Apple, Intel, AMD, and Qualcomm.

Apple: Apple actually started laying the groundwork for AI quite early, but it seems to avoid the "AI" label, often describing its work with more specific terms such as "machine learning". For example, in 2017 Apple launched Core ML, a machine learning framework for its developers that supports iOS, macOS, tvOS, and watchOS. This means developers building AI features within the Apple ecosystem can follow one unified framework without worrying much about compatibility. In addition, since the M1 chip, Apple has used a "unified memory" architecture in which the CPU and GPU share the same memory pool, which gives it clear advantages in bandwidth and capacity when running large models.

Intel: Intel launched the Intel Core Ultra processor specifically for AI PCs. In addition to the CPU and GPU, this processor integrates an on-chip AI accelerator, the neural processing unit (NPU). Note, however, that AI applications are usually not handled by the NPU alone; the CPU and GPU also provide AI acceleration.

AMD: AMD's AI PC processors use three compute engines: a CPU based on the Zen architecture, an integrated or discrete GPU based on AMD RDNA, and an AI engine (NPU) based on AMD XDNA. XDNA is a new architecture AMD introduced after acquiring Xilinx. It is an adaptive dataflow architecture that AMD expects to significantly change the PC experience, and it runs AI algorithms more efficiently, thereby reducing power consumption.

Qualcomm: Microsoft recently launched the Surface Pro and Surface Laptop AI PCs, based on Qualcomm Snapdragon X Elite and Snapdragon X Plus processors. Microsoft's marketing for these AI PCs leans more toward "ecosystem empowerment". While Intel and AMD tend to emphasize the advantages of running AI locally when promoting AI PCs, Microsoft seems less concerned with local deployment. For example, many features of Copilot, which Microsoft highlighted at its launch event, upload data to servers for processing, which amounts to taking the "cloud AI" route. This is understandable: as an operating-system vendor, Microsoft naturally wants to grow the Windows and Copilot ecosystem.

Conclusion

1. For those who want to try AI on their computers, "cloud AI" services such as ChatGPT and Wenxin Yiyan are the first choice, since they are cheap and easy to use.

2. For those concerned about information leakage, deploying AI locally on existing hardware is a good choice. The advantage is that no additional equipment is needed, since a gaming computer usually meets the requirements. The disadvantage is that such gaming computers are often bulky and inconvenient to carry around.

3. For those who need AI at work or on business trips, an AI PC is a good choice. AI PCs are generally thin and light, so taking one on a business trip can noticeably lighten the load on your shoulders.