Zhao Lihong Senior Town Planner

Wang Peng Senior Expert of Tencent Research Institute

At the end of 2022, ChatGPT appeared seemingly out of nowhere and showed thousands of industries that an inflection point for artificial general intelligence might be in sight. Subsequently, the GPT-4 series demonstrated outstanding capabilities in high-quality text generation and reasoning, LLaMA broadened the ecosystem of applications, and Sora made a stunning debut in video generation. General-purpose large models, and the Transformer architecture in particular, keep surprising us with how quickly their capabilities are growing.

However, despite their excellent capabilities and rapid progress, general-purpose large models still have considerable limitations when they face problems in specific industries or domains. Until the goal of AGI (artificial general intelligence) is realized, we still need to explore how large models can be applied in industry in the near term. Building industry large models and domain large models is a realistic choice. If the general-purpose large model is a high school graduate who has completed general education, we hope to teach it more domain-specific knowledge and skills so that it becomes an undergraduate or even a graduate student in the field and can provide more professional and precise help with domain problems. For cities, we even hope to use the machine's overwhelming advantages in storage and computation over the human brain to tackle complex system problems that the human brain cannot solve. Building an urban large model may help us solve urban problems, support urban development, assist planning decisions, and improve the effectiveness of governance.

What can urban large models do? What technology-driven changes do they bring to urban governance? What problems have arisen? How should urban large models be built? The technology is iterating very quickly and many questions have no definitive answer yet, but after more than a year of practical exploration some directions and trends have emerged.

I. Cognition:

What Is an Industry Large Model

Which of the following matches your understanding of an industry large model?

- Start from scratch with domain-specific data, beginning with pre-training, to build a large model.

- Learn industry-specific data and expertise on top of a general-purpose large model, i.e., an industry large model obtained by fine-tuning a general-purpose large model with industry knowledge.

- Develop applications that solve specialized problems on top of the capabilities of an underlying large model.

At present there is no precise definition of an industry large model, and all three of the above are called industry large models. From an ordinary user's perspective, if you only care about how well it works, you do not need to care which one it actually is. However, if you want to understand the technical route a little better, or you care about your own (or your industry's) data assets and accumulated knowledge, then you need to distinguish among the three.

1. Pure and simple

Start from scratch with domain-specific data, beginning with pre-training, to build a large model.

Imagine having a city model of pure pedigree, free of messy data noise. It is familiar with the history of urban development, understands the laws of industrial and economic development, and knows the current state of every aspect of the city. Ideally it also understands the competing interests of different stakeholders and upholds people-centered values. Whether it is used to empower urban governance, public services, or leadership decision-making, it would be excellent.

This is not technically impossible, but the cost is too high for it to be feasible. Industry data, computing costs, and technical thresholds are all problems. Without enough data there is nothing "large" to speak of. The officially reported training data is 753 GB for GPT-3, 4,828.2 GB for LLaMA, and a billion words for Wenxin. Converted into the longest documents we work with, general regulation (master plan) manuals at about 100,000 characters each, 1,000 GB is on the order of 5 million manuals, assuming roughly 2 bytes per Chinese character. In addition, the high computing cost and complex underlying technology make the threshold for training an industry model from scratch too high to reach and not cost-effective.
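The conversion above can be checked with a few lines of arithmetic. This is only a back-of-the-envelope sketch: the 1,000 GB corpus and 100,000 characters per manual come from the text, while the bytes-per-character values (2 for GBK, 3 for UTF-8) and the function name are assumptions made for illustration.

```python
# Back-of-the-envelope estimate; bytes per character are assumptions.
CORPUS_BYTES = 1000 * 10**9      # 1,000 GB in decimal gigabytes
CHARS_PER_MANUAL = 100_000       # characters per manual, as stated in the text


def manuals_equivalent(corpus_bytes: int, bytes_per_char: int) -> float:
    """Number of plain-text manuals needed to fill the corpus."""
    return corpus_bytes / (CHARS_PER_MANUAL * bytes_per_char)


for label, width in {"GBK, 2 bytes/char": 2, "UTF-8, 3 bytes/char": 3}.items():
    count = manuals_equivalent(CORPUS_BYTES, width)
    print(f"{label}: about {count:,.0f} manuals")
```

Under either encoding assumption the result is in the millions of manuals, far more planning text than any single industry has on hand.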

In the first half of 2023, when large models were at their hottest, a city leader asked several major tech companies whether they would be willing to train a large model specifically for a particular city or for government affairs. The answer, of course, was no.

At present, except for large models of special modalities such as genes, proteins, molecular structures, time series, and spatio-temporal data, there is no need to build an industry large model from scratch. Doing so is also inconsistent with the nature of large models, whose main capabilities come from pre-training.

2. Fine-tuning

Learn industry-specific data and expertise on top of a general-purpose large model, i.e., an industry large model obtained by fine-tuning a general-purpose large model with industry knowledge.

This is probably closest to most people's understanding. Compared with developing a new large model, fine-tuning an existing general-purpose large model is much simpler and faster, and needs only high-quality industry data. This concept was widely publicized in the first half of the year, so much so that the vast majority of clients like to ask: what data was used for your industry model, how was it fine-tuned, and how do the results differ before and after tuning?

However, this technical route also has conditions for when it applies, which depend on three factors: data and knowledge, parameter scale, and the base model.

(1) High-quality industry data. It is obviously important to decide what kind of industry knowledge is to be taught to the large model. Of course, actually mining, integrating, and using industry data is a very complex matter. In the urban domain, probably the only types of explicit knowledge are planning text descriptions, policy documents, and regulations. We believe that the most important common knowledge about cities is spatial knowledge, which is usually better conveyed through multimodal approaches and is set aside here for now. In urban planning and design, experience and knowledge such as "this design does not feel right" have to be grasped through intuition and cannot be learned by the model. Therefore, after sorting out and understanding industry and domain knowledge, we find that the knowledge a large model can learn is very limited, and so is what it can do. General-purpose AI is far from that general, and an AI that could understand such things would presumably be AGI.

(2) Parameter scale and intelligent emergence. GPT-3.5, Wenxin Yiyan, Tongyi Qianwen, and GLM are all at the scale of hundreds of billions of parameters. It is generally believed that "intelligent emergence" occurs only when the parameter scale reaches hundreds of billions (some believe a smaller scale, such as 50 to 60 billion, is enough). Facing complex urban systems, intelligent emergence is a capability we badly need. Yet industry large models are usually tuned from models with 10 billion parameters or fewer, because only then can good tuning results, efficiency, cost-effectiveness, and private deployment be achieved. So while such a model is still nominally a large model, it resembles one in form but not in spirit, and can hardly reach the level of intelligence we expect from artificial general intelligence.

(3) Base model capability versus capability gained by fine-tuning. A common scenario is that one goes to the trouble of several rounds of tuning, only to find that the painstakingly tuned capabilities are surpassed as soon as the base model is upgraded. So some people think that, rather than tuning an industry model, it is better to wait for the base model to be upgraded: there is no need to tune an industry model while the limits of base model capability are still nowhere in sight. Another frequent situation is that after one task capability is improved through fine-tuning, other capabilities drop sharply. This runs counter to the "general-purpose" strength of large models, and can only be solved by algorithm scientists.

Therefore, when people dig into fine-tuning industry large models, the view often arises that "industry large models" do not exist. Some even argue that no attempt should be made to give a large model a specialty.

But in a specific industry, we do want the model to be stronger in certain respects than a general-purpose large model. This brings us to the third type of industry large model.

3. Applied

Develop applications that solve specific problems on top of the capabilities of an underlying large model.

Many people's first reaction may be: can this really be called an industry large model? Yet it may be the most reliable form at this stage, and it is becoming more and more mainstream. The focus is not the model itself but the specific task to be accomplished. For a specific task, applications are developed by combining the large model's basic capabilities, such as understanding, memory, generation, and reasoning, with other tools or other models.

These specific problems, in the urban professions, fall into two categories: knowledge management and production, and operational problems. For knowledge management and production, the large model's retrieval-based question answering and content creation capabilities are used to address creative and normative issues in the planning and design process. For operational problems, such as automated drawing and modeling, the large model completes a chain of thought and action, from task understanding to instruction generation to service invocation, and improves the efficiency of operational work.
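The chain of task understanding, instruction generation, and service invocation can be sketched roughly as follows. Everything here is hypothetical: `fake_llm` is a hard-coded stand-in for a real large model call, and `draw_buffer` stands in for whatever drawing or modeling interface an actual system would expose.

```python
import json
from typing import Callable, Dict


def draw_buffer(radius_m: float) -> str:
    """Hypothetical drawing service; a real one would call a CAD or GIS interface."""
    return f"buffer of {radius_m} m drawn"


# Hypothetical registry of services the model is allowed to call.
SERVICES: Dict[str, Callable[..., str]] = {"draw_buffer": draw_buffer}


def fake_llm(request: str) -> str:
    """Stand-in for a large model that turns a request into a JSON instruction."""
    # A real model would be prompted with the service schema; this mapping is hard-coded.
    if "buffer" in request.lower():
        return json.dumps({"service": "draw_buffer", "args": {"radius_m": 500.0}})
    return json.dumps({"service": None, "args": {}})


def run(request: str) -> str:
    instruction = json.loads(fake_llm(request))      # instruction generation
    service = SERVICES.get(instruction["service"])   # look up the requested service
    if service is None:
        return "no matching service"
    return service(**instruction["args"])            # service invocation


print(run("Draw a 500 m buffer around the station"))
```

Keeping the instruction as structured JSON, rather than free text, is one common way to make the model's output checkable before any service is actually called.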

For knowledge management and production, an industry knowledge base must be built in addition to the large model. For operational problems, the focus is on the framework for connecting system interfaces and on adapting existing interfaces to the large model. Both are quite troublesome, and are expanded on in the third part. In addition, for complex scenarios, the applied route and fine-tuning can be combined.

Looking back at 2023, large models went through three stages: competing on models in the "war of a hundred models" at the start of the year, competing on training with industry data in the middle of the year, and, by the end of the year, finding that real-world results still depend on competing on applications. These stages happen to echo the three types of industry large model above.

Technology is evolving rapidly and our understanding keeps iterating. We look forward to further breakthroughs in the underlying technology of large models, to the digital accumulation and intelligent reconstruction of industry, domain, and expert knowledge, and to the emergence of industry-specific applications and even super apps that bring something new to cities in need of renewal and an economy in need of stimulus.

II. Applications:

The Urban Large Model and Its Application Scenarios

1. How to define the urban large model

The discussion above concerns how industry large models are understood, with the focus on "large models". In fact, the word "urban" is harder to define.

Professionals in the urban planning and construction industry have a largely uniform understanding of cities. A city is a form of human settlement, a center of economic and social activity, a collection of buildings and facilities, a territorial political entity, and so on.

However, once out of this circle, it is very difficult to unify everyone's perception of "city". Whether facing information technology vendors, artificial intelligence technology experts, or even city governments, the "city" in everyone's mind is not the "city" in the planner's mind.

The concept of the city is too abstract, and its connotation and extension are too rich. Urban research touches on science, technology and civilization, industry and consumption, ideas and the humanities, and more; it seems that anything can be fitted in and made to keep pace with the times. Apart from philosophy, probably no other discipline would dare to lay claim to such a rich scope.

In the commercial market, however, there is no need for such richness of connotations and extensions, only for clear transactions and profits. In the commercial market, the "city" is a type of business whose customer is the city government, which pays the bill.

In this way, the urban large model becomes a large model built at the government's expense. At first hearing, this definition is too blunt. But seen from another angle, if the government pays for it, it is an area of public service or public management where the market fails. So the urban large model is a large model applied to public services and public administration in the context of market failure, which is a fair definition.

Another perspective starts not from the market but from data and knowledge: define the urban large model in terms of human settlements knowledge, that is, as the large model of the human settlements industry.

Planning, design, construction, and operation and maintenance involve a large body of interrelated common industry knowledge, such as policies and regulations, standards and codes. Collating it is a huge workload, and copyright issues hinder its promotion and use. To encourage all parties to apply or build segment-level and enterprise-level models, the industry authorities should, on the basis of a clear top-level judgment about the industry's technological development, organize the relevant content and technical resources, integrate existing practices and products, and put forward a reasonable and open architecture for the industry large model. Enterprise models can then be built on top of it, and it can even become a market-based platform for the flow and sharing of industry knowledge.

The applications described below are mainly market-defined urban large models, which are the engineered, scaled, and commercialized applications seen on the ground so far. Urban large models defined by data and knowledge, on the other hand, are mostly experimental and exploratory, and may require deeper breakthroughs in the industry's organizational models and operating mechanisms before they can be deployed.

2. Application scenarios of existing urban large models

Since we are talking about "existing" and "application", we set aside concepts, models, and visions and talk about what is actually on the ground. Large model technology may seem lofty, but people's expectations are sometimes loftier still. Existing applications are not science fiction at all, so please be prepared and do not be too disappointed: the reality is rather bare-bones.

The application scenarios we have seen this year fall into a few categories: assisted decision-making, agile governance, government services, and others.

(1) Assisted decision-making

Decision-making itself is complicated. Planning decisions are, at their core, the result of trade-offs and bargaining among interests, and current large models obviously cannot do this. Technically, artificial intelligence can be divided into four levels: computational intelligence, perceptual intelligence, cognitive intelligence, and decision-making intelligence (some divide it into three levels, treating decision-making intelligence as the advanced stage of cognitive intelligence). Large models have advanced AI from perceptual intelligence toward cognitive intelligence, but there is still a considerable distance to decision-making intelligence.

Assisted decision-making is much simpler: providing similar cases, data analysis, and policy analysis all count. With its storage and computational advantages, AI is in theory able to provide more comprehensive and faster decision support. "AI is responsible for being comprehensive and correct, and humans are responsible for making the hard choices" is the ideal division of labor between AI and humans in the decision-making process.

However, whether the material is cases, policy texts, or indicator values, being comprehensive and correct presupposes that data and knowledge have been entered first, in the form of case bases, policy libraries, and databases. None of these can be built without manual labor. Although tools based on AI technology such as large models can help with the construction, the input of manual labor and expertise is always indispensable. To make fuller use of AI's capabilities, this knowledge engineering or data governance work has to be done first.

In recent years, information technology projects such as City Brain and Industry Brain have to some extent brought urban data together. So at present the easiest application scenario is to organize these converged databases and use the large model's semantic interaction and flexibly generated data analysis, so that a leader can ask anything and the large model answers instantly. To a certain extent, this is an upgrade of the previous generation of "leadership cockpit".

The city health check likewise uses data to reflect the state of the city. However, it has too few indicators to show the advantage of large-scale computation, so the large model adds very little value there. The interaction and flexible generation of large models become valuable only when there are thousands of data indicators.

Data analysis flexibly generated by large models has considerable limitations as decision support. Although the large model has some ability to generalize and understand, it cannot deeply understand the complex relationships between indicators, especially in complex urban systems whose elements are organically related to one another. The large model can only perform superficial indicator calculations and cannot understand and analyze causality, correlation, and other relationships. Its strength is speed, not depth.

How can the analysis be deepened? We need to teach expert knowledge to the AI. The "urban meta-indicators" we proposed last year is an urban knowledge engineering project that lets the AI understand data indicators more deeply by building a data structure based on the logical relationships between indicators, rather than a simple table.
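To make the contrast with a simple table concrete, here is a minimal sketch of indicators stored as a graph of named relationships. It is not the actual urban meta-indicators design, which the text does not specify; the class, the indicator names, and the relation labels are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class IndicatorGraph:
    # edges[source] holds (relation, target) pairs
    edges: Dict[str, List[Tuple[str, str]]] = field(default_factory=dict)

    def relate(self, source: str, relation: str, target: str) -> None:
        self.edges.setdefault(source, []).append((relation, target))

    def downstream(self, start: str) -> List[str]:
        """All indicators reachable from start, in breadth-first order."""
        seen, queue, order = {start}, [start], []
        while queue:
            node = queue.pop(0)
            for _, target in self.edges.get(node, []):
                if target not in seen:
                    seen.add(target)
                    order.append(target)
                    queue.append(target)
        return order


graph = IndicatorGraph()
graph.relate("resident_population", "is_numerator_of", "population_density")
graph.relate("built_up_area", "is_denominator_of", "population_density")
graph.relate("population_density", "influences", "school_seat_demand")

print(graph.downstream("resident_population"))
```

With relationships made explicit, a question such as "what is affected if the resident population changes" becomes a graph traversal instead of a guess from column names.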

On the other hand, we can let AI play to its strengths and avoid its weaknesses, and do more with being "fast enough". This leads to the next type of application scenario: agile governance.

(2) Agile Governance

The fourth industrial revolution has driven changes in the way of human production and life with unprecedented speed, breadth and depth, and the accompanying high degree of complexity and uncertainty has also led to the public administration model and organizational decision-making model of traditional government governance being too rigid to cope with the new needs, new problems and new challenges.

In order to cope with the rapidly changing external environment and the random emergence of immediate urban problems, a variety of governance logics have been put forward by the academic community, and agile governance has emerged in this context. Agile governance was formally proposed in the white paper Agile Governance: Reimagining Policy-making in the Fourth Industrial Revolution, released by the World Economic Forum in 2018, but its ideas and practices can be traced back to the turn of the century or even earlier. Agile governance "aims to build a governance model that can respond quickly and responsively to the needs of the public to improve the operational efficiency of the organization and improve the user experience."

On the object of governance, agile governance emphasizes people-oriented and user-oriented. On the governance tempo, it emphasizes rapid response and early intervention; problem identification cannot be done completely at once, but response is more important than silence. On the governance approach, it emphasizes flexible adaptation, progressive iteration, non-linear decision-making, and execution is decision-making. The governance relationship emphasizes two-way interaction, equal participation in decision-making, and real-time dynamic feedback and evaluation of policies.

Numerous mega-cities at home and abroad have realized first-time access to social demands through the operation of city hotline platforms, such as the New York municipal hotline 311 and the Beijing municipal citizen service hotline 12345, which are typical practices of agile governance by responding to demands quickly from the source. Although the merits and demerits of such practices are still debated in the academic community, their complementary significance to bureaucracy and elite governance cannot be denied.

Large model technology can support the broad collection of, and rapid response to, these problems and demands well, including rapid identification, summarizing and refining, dispatch and distribution, and handling suggestions based on past cases, regulations, and rules. Although some of these functions can be accomplished with small models, large models provide greater efficiency and a better experience. Here again, a certain amount of knowledge input is required, and multimodal large models may also be involved.
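The identify-then-dispatch flow can be sketched as below. The keyword matcher is only a placeholder for the classification a large model would perform, and the categories, keywords, and department names are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical routing table: category -> responsible department.
ROUTES = {"water": "Water Affairs Bureau", "road": "Transport Commission"}
# Hypothetical keywords; a large model would replace this lookup.
KEYWORDS = {"water": ["leak", "pipe", "flood"], "road": ["pothole", "traffic light"]}


def classify(text: str) -> str:
    """Placeholder for problem identification."""
    lowered = text.lower()
    for category, words in KEYWORDS.items():
        if any(word in lowered for word in words):
            return category
    return "other"


def dispatch(complaints: List[str]) -> Dict[str, List[str]]:
    """Group complaints by the department that should handle them."""
    queues: Dict[str, List[str]] = defaultdict(list)
    for text in complaints:
        department = ROUTES.get(classify(text), "General Hotline Desk")
        queues[department].append(text)
    return dict(queues)


calls = ["Pipe leak on Elm Street", "Pothole near the school", "Noise at night"]
for department, items in dispatch(calls).items():
    print(department, items)
```

Anything the classifier cannot place falls through to a general desk, which mirrors the point that rapid response matters more than perfect identification on the first pass.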

(3) Government services

This direction is relatively specific and is singled out because "intelligent Q&A" is generally recognized as the most mature large model application. Compared with assisted decision-making and agile governance, which are more 2G in nature, it also has the attributes of a 2C service, or what is known as G2C, so it is easier to deliver results the public can perceive. It was indeed among the first urban large model projects to be validated on the ground in 2023.

Government services refer to administrative services such as licenses, confirmations, rulings, rewards, penalties, etc. provided by governments at all levels, relevant departments and institutions for social organizations, enterprises, institutions and individuals in accordance with laws and regulations. The online platform for government services, by linking the information systems of different departments and connecting online and offline services, reduces cumbersome formalities and processes, allowing the public and enterprises to handle all kinds of affairs more quickly and improving the efficiency of administrative services.

The large model can learn policy documents, laws and regulations, and service guides of all kinds, provide policy consultation and guidance on handling procedures for the public, and provide enterprises with policy interpretation and matching to the policy benefits they can claim.

Although "intelligent Q&A" is considered the most mature application scenario, if you are not satisfied with mindless answers and want better results, you still face many technical problems and need the support of business experts. The first step is to gather all the policy documents, laws and regulations, and service guides. These materials may contain provisions that are similar yet different, or even somewhat contradictory, and policies also have issues of timeliness, continuity, and relevance, none of which is easy to handle. Ideally, we would like a policy knowledge base that is not simply a folder with a pile of texts, but a structured knowledge library with real-time updating, intelligent retrieval, semantic understanding, and other functions.

It is not easy to establish a knowledge base for government services. From the business perspective, it is necessary to define the boundary of the knowledge scope, collect knowledge data, and grasp the knowledge structure. From the technical perspective, it involves data collection (import and cleaning, document parsing, word segmentation), knowledge production (modeling, fusion, disambiguation), knowledge enhancement (vectorization, similarity calculation, inverted indexing), knowledge management (retrieval, intervention, traceability analysis), and other processes. Knowledge base construction itself also needs large model technology to reduce manual input and improve efficiency.
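As a rough illustration of the knowledge enhancement step, the sketch below combines an inverted index with bag-of-words vectors and cosine similarity. It is a toy under stated assumptions: the three documents are invented, whitespace splitting stands in for real word segmentation, and a production system would more likely use learned embeddings and a vector database.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Set

# Invented sample documents standing in for policy texts.
DOCS = {
    "d1": "business license application guide",
    "d2": "housing subsidy policy for graduates",
    "d3": "business tax subsidy policy",
}


def tokenize(text: str) -> List[str]:
    """Whitespace split; Chinese text would need a word segmenter here."""
    return text.lower().split()


# Inverted index: token -> ids of the documents that contain it.
INDEX: Dict[str, Set[str]] = defaultdict(set)
for doc_id, text in DOCS.items():
    for token in tokenize(text):
        INDEX[token].add(doc_id)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str) -> List[str]:
    """Use the index to find candidates, then rank them by similarity."""
    q = Counter(tokenize(query))
    candidates = set().union(*(INDEX.get(t, set()) for t in q))
    return sorted(candidates, key=lambda d: cosine(q, Counter(tokenize(DOCS[d]))), reverse=True)


print(search("business subsidy policy"))
```

The inverted index narrows the search to documents sharing at least one term with the query, and the similarity score then orders those candidates.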

Similar to the Urban Meta-Indicators Knowledge Project, this is also a more complex systematic project.

If we apply the same technical approach to a broader range of policy documents, not limited to those for public and business services, this intelligent Q&A can support a much broader range of policy formulation and evaluation, not just government services.

(4) Other

The urban big model defined with data and knowledge as the core involves a number of specific fields such as planning, architecture, transportation, municipal, real estate, property, etc., and there are attempts to apply the big model in various fields. Due to space limitations, only a brief description is given here, and a detailed explanation will be given in the "Generative AI Habitat Field Application Trend Research Report" to be released by Tencent Research Institute.

In the planning phase, based on vector databases and the Open Data Set for Urban Planning, the planning large model has realized three major functions, namely knowledge retrieval, text generation, and information fusion. These help planners with preliminary research and with summarizing and organizing information, and can also generate a variety of deliverable texts.

For the spatial and spatio-temporal data specific to the human settlements industry, which large language models cannot yet understand directly, the urban cognition large model breaks through the limits of spatial understanding with techniques such as spatio-temporal knowledge graphs.

Image generation models have been widely used in the design field, enabling one-click generation and free exploration of ideas, as well as online editing and local regeneration of results. In particular, cloud-based model training allows users to train their own style models from images.

Sora's arrival gives us a glimpse of the path from video to 3D large models. The maturing of technologies such as NeRF and Gaussian splatting has made 3D reconstruction of spaces more efficient. BIM-level editable 3D models can be generated automatically from text, images, sketches, and videos, and I believe that generating 3D architectural schemes directly from verbal descriptions is not far away.

In property services, a "1+i" super-housekeeper service model that combines the real and the virtual is also taking shape, with human housekeepers providing offline services and digital housekeepers acting as digital twin assistants. Even equipment operation and maintenance can receive expert-level support from a large model.

The Transformer model, built on the attention mechanism, can understand natural language at massive scale and can carry implicit spatial knowledge such as history and culture, life experience, and regional characteristics, as well as structured knowledge such as regulations and codes. The diffusion model, by learning from a large number of works, can form a specific design style and create images or even 3D works. As a result, large language models and generative AI will not only change the shape of design tools, but may also create unprecedented spatial features in new ways. Designers will no longer be limited by traditional creative thinking patterns and technologies, and will be able to open up new design fields and methods of creation with the powerful capabilities of AI. From creative sketching to detailed design, from local renovation to urban renewal, from individual buildings to urban planning, large language models and generative AI will have a profound impact on every stage of design. At the same time, the output of generative AI can also advance design theories and methods, forming a more intelligent, efficient, and humane design system.

Through a comprehensive description and understanding of the site and its surrounding spatial and temporal elements, coupled with knowledge engineering of codes, standards, and expert experience, design schemes may in the future be generated directly by means such as agents. Of course, for many projects the process will still require strong involvement from architects and owners. Generation may run from the conceptual scheme to the 3D model, and then work backward to produce the various engineering drawings along with associated costs, product descriptions, and other information, and the logic of producing and using BIM will change fundamentally. In the future, the bottom-up effect of AIGC will not only raise the overall design level of the industry, but also, for example, generate more complex simulations of social operation and spatio-temporal behavior through generative agents and social networks, facilitating broader public participation.

At this stage, these "large models" are being applied separately in various fields and segments. Ultimately, however, taking urban space as the core and integrating all spatial knowledge into a broad "urban large model" that serves as common infrastructure for all related fields is the only way to maximize the value of large models.

The implementation of a broad urban big model requires top-level design and overall planning by industry authorities to ensure compliance with the industry's common knowledge base and to promote the establishment of a scalable and synergistic technical architecture for the industry's big model, and at the same time, to explore a sustainable operation and service model. This architecture and model should promote effective communication and collaboration among all links in the industry chain, enabling each link to independently construct and apply its domain model and enterprise model, and to realize cross-domain synergy and integration, so as to enhance the competitiveness and innovation capability of the entire industry. This kind of integration is especially necessary in the urban sector, where there are many fields and a high degree of synergy.

III. Outlook:

Expected value of a large urban model

Game Story 1 - Stanford's 25-Person Town

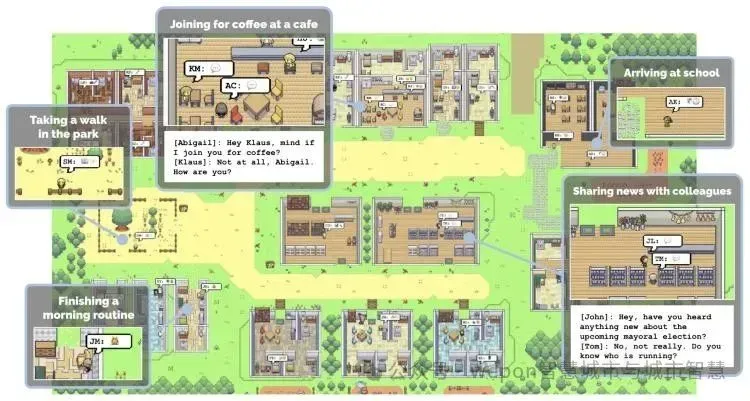

In April 2023, researchers from Stanford and Google built a "virtual town" that caused a stir in AI circles. The town has 25 AI agents. These generative agents have different identities, such as a drugstore owner, a university professor and his beloved wife, a son studying music, and a neighbor couple, and their behavior matches their identities. They can interact with their environment and behave appropriately in different places such as cafes, bars, parks, schools, dormitories, houses, and stores. On seeing a leaking bathtub, an agent will fetch tools from the living room and try to fix the leak. What's more, they develop "emergent social behaviors", spreading information to one another and collaborating on events such as a Valentine's Day party (https://arxiv.org/pdf/2304.03442.pdf).

Previously, simulating such complex systems and social behaviors was very difficult, both in computing and in urbanism and sociology. Approaches ranging from cellular automata (CA) to multi-agent systems (MAS) could only model relatively simple systems. The agents need to be aware of their own identities, have memories, behave coherently, and collaborate with others; coupled with extremely high spatio-temporal complexity, this usually made such problems non-computable. With large language models, however, dynamic and complex interaction simulations that unfold over time have been realized.

This is probably closer to the value we would expect from big models in the urban domain than the down-to-earth applications of the status quo described above.

1. Underlying algorithms: facing up to "emergence"

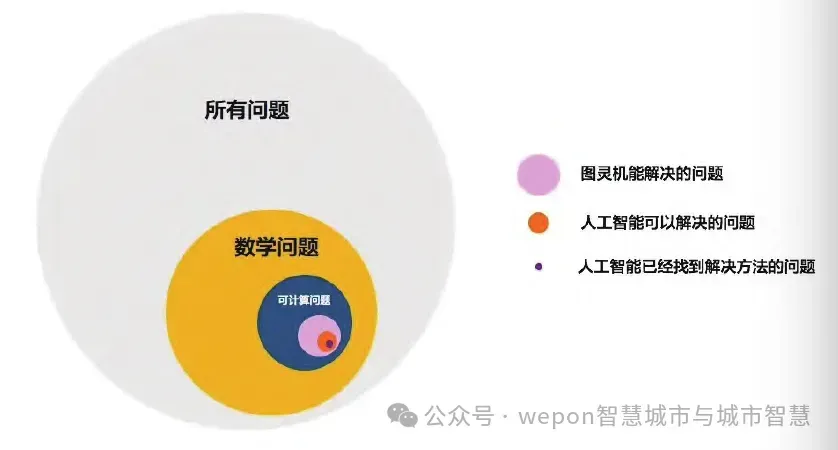

A computable problem is one that can be solved by an algorithm or program. However, the vast majority of problems in the real world are not computable and cannot be solved by computation.

How many urban problems can be abstracted into computational models and solved by computation? A planner's intuition might say almost none. Some may exist in specific verticals, such as transportation or energy and resource supply. However, the emergent problems the city faces as a complex system, i.e., problems arising from the coupling of multiple subsystems, are difficult to abstract into mathematical problems.

The scope of computable problems has been expanding rapidly, and continues to do so, as algorithms improve, data volumes grow, and computational power increases. The Stanford 25-person town shows how dramatically the entirely new possibilities opened up by big model techniques can push out the boundary of computable problems.

The magic of big models is "emergent intelligence". In the past, an AI knew only what it had been taught; what it had not been taught, it did not know. But once the number of parameters reaches a certain scale, models suddenly turn out to have picked up things they were never taught. Whether it is a city or a big model, "emergence" is one of the basic attributes of complex systems. Doesn't it sound wonderful to use the emergent intelligence of big models to cope with the emergent problems of complex urban systems?

In fact, the theoretical workings of big models are still not well understood anywhere in the world. So-called "emergence" is a label for a mechanism that has not yet been clarified and cannot yet be clearly explained. Yet we often want explainability, both in the study of urban problems and in the study of how big models work.

However, on the other hand, whether we are doing urban design or making planning decisions, we are actually not pursuing the only correct solution, but often just proposing a relatively balanced and reasonable program that becomes a platform for discussion and consensus. In this sense, the ability of the big model is a good match: more efficient decision-making can be achieved through human-computer collaboration to complete the simulation and extrapolation of complex systems. However, it should be re-emphasized that big models should not try to pursue the "only correct solution".

2. Application architecture: AI Agent and RAG

(1) AI Agent

AI Agent is the big model application architecture that has received the most attention in the industry. According to Andrew Ng (Wu Enda), those waiting for a better big model such as GPT-5 can already obtain comparably improved results by using agents. An AI agent uses a large language model as its brain and has the ability to autonomously understand, perceive, plan, remember, and invoke tools. Its applications fall roughly into two categories, automated agents and anthropomorphic agents:

Automated agents are designed to automate complex processes. Given a goal, the agent creates tasks on its own, completes them, creates new tasks, reprioritizes its task list, completes the new top priority, and repeats the process until the goal is accomplished. For example, tell the machine to design a bedroom of a specific size with certain features and furniture. The machine can generate instructions from its understanding of the requirements, invoke drawing software, and operate it autonomously to produce the design. Automated agents may thus change the way software interacts with its users. In the near term, the difficulty lies not only in the capabilities of the big model itself but also in the framework for connecting to system interfaces and in adapting existing interfaces for use by big models.

Anthropomorphic agents are designed to simulate human emotions and interpersonal interactions, and usually place little demand on generative accuracy. The uncertainty of big models becomes an advantage here, allowing for diverse simulations. In a multi-agent environment, scenarios and capabilities beyond the original design may also emerge. Anthropomorphic agents are becoming a new kind of emotional consumer product by offering companionship with higher emotional value. In social simulation and city simulation, the areas that most excite urban researchers, the Stanford 25-person town has performed amazingly well, but no in-depth, feasible studies have yet appeared that support rigorous planning analysis or policy decisions.
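The automated loop described above (create tasks, complete the top priority, re-plan, repeat) can be sketched in a few lines. This is only a minimal illustration under stated assumptions: `run_agent`, `toy_plan`, `toy_execute`, and `STEPS` are hypothetical names, and the two toy functions stand in for what would in practice be a call to a large language model and a call to a tool such as drawing software.

```python
from typing import Callable, List

# Hypothetical task breakdown for the bedroom example; a real agent would
# obtain this from the language model rather than from a fixed list.
STEPS = ["measure room", "place bed", "place wardrobe", "draw plan"]


def toy_plan(goal: str, done: List[str], pending: List[str]) -> List[str]:
    """Stand-in for an LLM planner: return the prioritized list of remaining tasks."""
    return STEPS[len(done):]


def toy_execute(task: str) -> str:
    """Stand-in for a tool call (e.g. operating drawing software)."""
    return f"done: {task}"


def run_agent(goal: str,
              plan: Callable[[str, List[str], List[str]], List[str]],
              execute: Callable[[str], str],
              max_steps: int = 10) -> List[str]:
    """Create tasks, complete the top priority, re-plan, and repeat."""
    tasks = plan(goal, [], [])        # initial task list
    done: List[str] = []              # results so far (the agent's memory)
    for _ in range(max_steps):        # hard cap so the loop always terminates
        if not tasks:
            break                     # nothing left to do: goal reached
        task, pending = tasks[0], tasks[1:]
        done.append(execute(task))
        # The planner may add, drop, or reorder tasks based on the results.
        tasks = plan(goal, done, pending)
    return done


results = run_agent("design a bedroom", toy_plan, toy_execute)
```

The `max_steps` cap is a deliberate design choice in this sketch: because every planning and execution step would consume model calls in a real system, an unbounded loop is also an unbounded bill.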

(2) RAG

If the AI Agent still seems a bit distant, the RAG architecture is a very realistic option in the short term.

RAG stands for Retrieval-Augmented Generation. Simply put, an external knowledge base supplies the big model with additional domain-specific knowledge: relevant material is retrieved from it, and the model answers on the basis of that material. It is similar to giving the model a reading comprehension question, letting it read the given material first and then answer. This approach is clearly much more reliable than answering quiz questions from memory, and it can effectively mitigate the problems of big model hallucination, out-of-date knowledge, data security, long training time, and the need for high computing power.
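The "reading comprehension" idea can be illustrated with a minimal sketch. It makes several assumptions: the knowledge base entries are invented placeholders, the function names are hypothetical, and simple keyword overlap stands in for the embedding-based retrieval that production systems typically use. The final step, sending the prompt to a large language model, is left out.

```python
import re
from typing import List, Set

# Invented placeholder entries, not real regulations.
KB = [
    "Residential plots in zone A have a maximum floor area ratio of 2.5.",
    "Parking permits are renewed at the district service center.",
    "Street trees must be planted at least 1.5 meters from the curb.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, knowledge_base: List[str], top_k: int = 1) -> List[str]:
    """Return the top_k passages sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(knowledge_base,
                    key=lambda passage: len(q & tokenize(passage)),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, passages: List[str]) -> str:
    """Put the retrieved material in front of the question, like a reading exercise."""
    material = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the material below.\n"
            f"Material:\n{material}\n"
            f"Question: {question}")


question = "What is the maximum floor area ratio in zone A?"
prompt = build_prompt(question, retrieve(question, KB))
```

Because the model only sees what `retrieve` returns, the quality and structure of the knowledge base largely determine the quality of the answer.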

The government service scenario mentioned above is based on the RAG architecture. For the broader urban domain, we can manage and produce knowledge by organizing and building knowledge bases for each sub-domain. Here, a specialized knowledge base may be more critical than the big model itself. In finance, law, healthcare, construction, and other fields, many leading enterprises are already investing in industry knowledge bases, which have also become a new means of accumulating industry and domain knowledge assets and mining the value of data.

Urban domain knowledge is characterized by high complexity, long-tail fragmented knowledge, and a heavy reliance on common sense. Taken together with the discussion of fine-tuned industry big models in Chapter 1, we lack both the knowledge and the practical attempts needed to understand which knowledge is suited to externalization in a knowledge base and which is suited to internalization in the underlying model, let alone how to structure domain knowledge. Yet this is most likely the starting point of an industry's integration with big models. In other words, it is the foothold from which an industry can keep iterating and updating in the era of big models.

3. Data literacy: top-level design and industry synergies

Knowledge, or data, is the key to urban big models. In practice, the city is decomposed into sub-systems such as the industrial economy, planning and building, transportation and municipal services, urban management, and emergency response. Each sub-system needs its own industry big model, and their shared spatial attributes will eventually bring these big models together.

Each industry's own regulations, norms, and standards form the common basis for industry applications of big models and raise copyright and commercial issues. The competent authorities of each industry therefore need to take the lead in top-level design and overall planning, to ensure the compliance of the industry's common knowledge base and to promote a scalable and synergistic technical architecture for the industry's big model. Such an architecture should promote effective communication and collaboration among the links in the industry chain, enabling each link to build and apply its own domain model and enterprise model independently while achieving cross-domain synergy and integration, thereby enhancing the competitiveness and innovation capacity of the entire industry. In the urban sector, such integration is particularly necessary because the paradigm and workflow changes involve a large number of industries.

4. Cost-efficiency: how to get value for money

For over a year now, industries have been enthusiastic about big models. Many needs have been discussed, but very few have been put into practice. On the one hand, the demand side has limited understanding of big model technology, so the needs it puts forward tend to be "science fiction". On the other hand, for economic reasons, it is difficult to build a commercial closed loop in the vast majority of scenarios: technical solutions exist, but they are not cost-effective. Urban big models apply to public services and public administration, areas of market failure where commercial monetization is not the first priority, but even so the cost-effectiveness cannot be too unreasonable.

In fact, cost is a problem that no LLM application can currently bypass, not only industry big models. From the training perspective, in one of our projects last year, a single training run on just 30 MB of text data with a 10-billion-parameter model cost close to ten thousand yuan. Moreover, the training effect cannot be predicted in advance; results may still fall short of expectations after several rounds of iterative training. This cost has been falling and continues to fall, but it is still high.

From the perspective of application development, usage is billed by the number of input and output tokens, so overly complex tasks consume excessive tokens. It is no joke that a complex task can run up a villa's worth of charges in one night.
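The arithmetic behind token-based billing is simple, which is exactly why costs escalate quickly when a task involves many model calls. The sketch below is illustrative only: the function names are hypothetical, and the prices and token counts are made-up placeholders, not any provider's actual rates.

```python
def call_cost(input_tokens: int, output_tokens: int,
              price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Cost of one model call when input and output tokens are priced separately."""
    return (input_tokens / 1000 * price_in_per_1k
            + output_tokens / 1000 * price_out_per_1k)


def task_cost(num_calls: int, avg_input_tokens: int, avg_output_tokens: int,
              price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Total cost of a task that makes num_calls calls of similar size."""
    return num_calls * call_cost(avg_input_tokens, avg_output_tokens,
                                 price_in_per_1k, price_out_per_1k)


# Placeholder figures: 8,000 input and 1,000 output tokens per call,
# priced at 0.03 and 0.06 currency units per 1,000 tokens.
single_call = call_cost(8000, 1000, 0.03, 0.06)
overnight_run = task_cost(10_000, 8000, 1000, 0.03, 0.06)
```

Cost scales linearly with the number of calls, and agent-style tasks that re-send a long context on every step multiply both the call count and the input tokens per call.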

Deploying and serving models is also a large expense. Although this cost can drop by orders of magnitude when moving from hundreds of billions of parameters to tens of billions, a commercial closed loop is still hard to achieve because the value of the results is not easily assessed.

Considering the energy costs of AI at a more macro level, the math is even worse. From the standpoint of cost and efficiency, cases like the Stanford 25-person town above exist only in the lab, with no possibility of real-world deployment for now.

The human brain has over 10 billion neurons. Big model parameters are on the scale of hundreds of billions, which can loosely be likened to hundreds of billions of artificial neurons. At present, human neurons still work together far more efficiently than those of a big model, and for most specialized tasks it remains more reliable to rely on people in the short term.

Large models are only cost-effective if the task is generalized enough to replace enough people, or if the demand for computation, speed of computation, etc. exceeds human limits.

5. From large language models to multimodality

In this article, "big models" refers to large language models, and multimodality has not been covered. That multimodality is an important future direction for big models has long been an industry consensus, but it has arrived faster than almost anyone expected. From the text-to-image capability that comes standard with general-purpose big models to Sora's breakthrough in text-to-video, continued scaling under the Transformer framework has brought forth ever richer cognitive capabilities.

Knowledge in the urban domain is naturally multimodal. Planning, architecture, landscape, and other design and engineering disciplines describe spatial form through text and drawings, and the transportation and municipal fields have even richer specialized modal data.

Through learning from text, Transformer models can carry implicit spatial knowledge such as history, culture, life experience, and regional characteristics, as well as structured knowledge such as regulations and norms. Diffusion models, by learning from a large number of design works, can form specific design styles and create images or even 3D works. Sora shows us that video generation techniques can develop a high degree of three-dimensional consistency, which suggests that three-dimensional models might be generated directly through similar methods.

Ultimately, when the Big Model has the ability to understand and create the social space, characterized by language, and the physical space, characterized by three-dimensional space, and connects them together, it has the opportunity to truly understand and create the city of the future.

Game Story 2 - AI Agent Voyager Dominates Minecraft

Let us end with another game story.

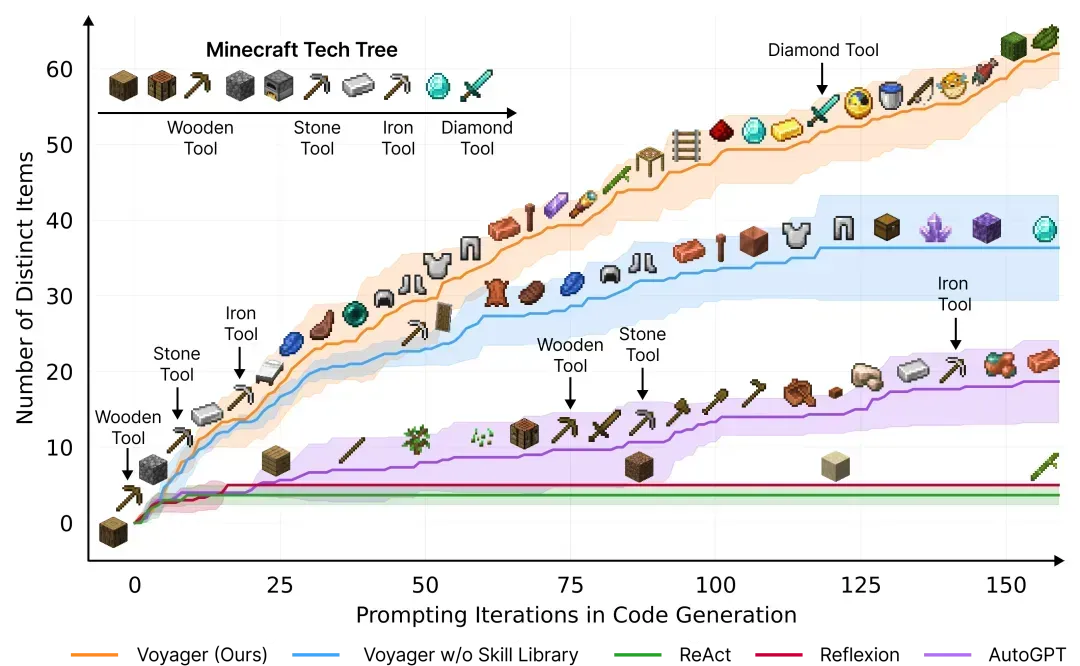

This work was also released in 2023. In the game Minecraft, an AI agent called Voyager, driven by a large language model and capable of lifelong learning, explores the world non-stop using GPT-4. It continually develops increasingly complex skills and is always self-driven to make new discoveries without human intervention.

Through independent learning it has mastered the basic survival skills of digging, building houses, collecting, and hunting. Driven by itself, it explores the magical world, traveling to different places, passing an ocean and a pyramid, and even building its own portals. It expands its items and equipment, equips itself with different levels of armor, and uses fences to keep animals captive. In different environments it sets itself appropriate tasks: if it finds itself in a desert rather than a forest, it learns to collect sand and cacti before it learns to collect iron. It refines its skills based on environmental feedback and commits the skills it has mastered to memory.

To expand on this, we would like to have an AI to which we give one task: "Improve and optimize the city continuously, so that the city is better tomorrow". It then proposes appropriate tasks according to the current level of technology and the state of the city, which is equivalent to carrying out an urban health check and urban planning. Next, it improves its strategy based on feedback from the environment, memorizes the strategies it has mastered together with the feedback, and reuses them in similar situations. This is equivalent to implementation on the ground, deepening its knowledge of the city and dynamically correcting plans and strategies along the way. Because in improving a city there is no best, only better, it will continue to explore the city, finding new tasks in a self-driven way to make the city better tomorrow.