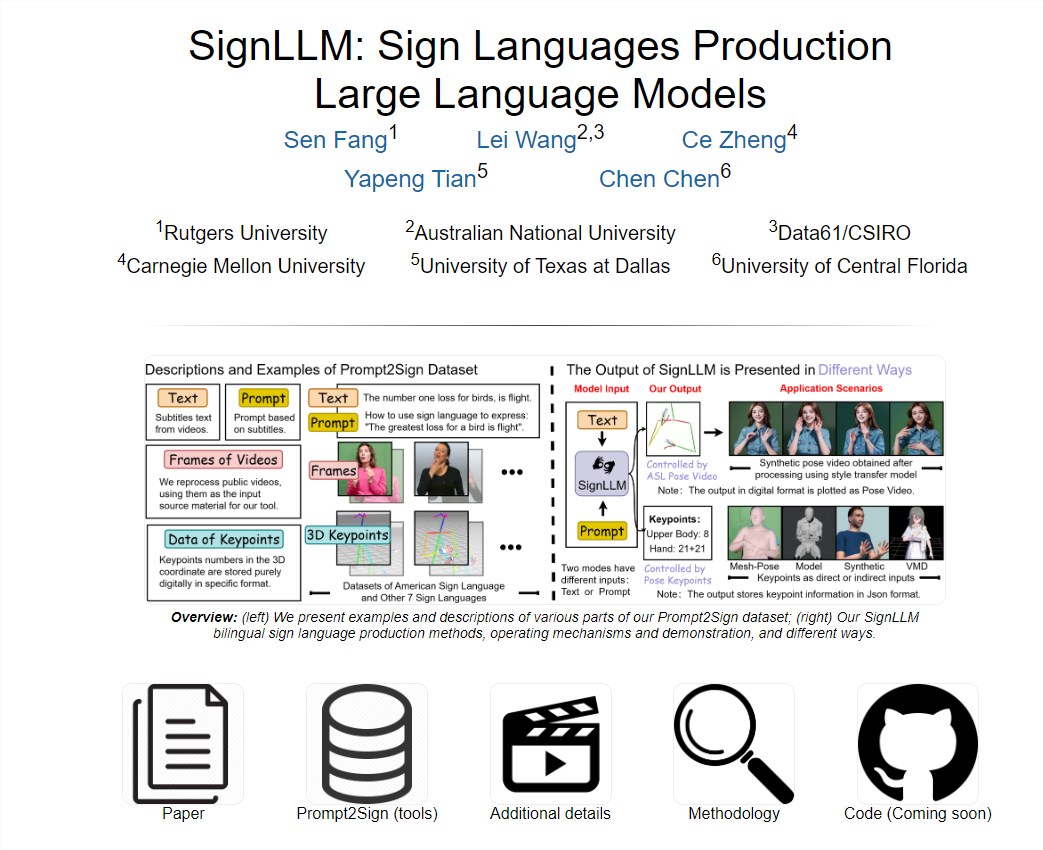

SignLLM is an innovative multilingual sign language model that can generate sign language videos from text descriptions. This technology is a major advance for the hearing impaired because it provides a new way to communicate.

Here are some key features of SignLLM:

Text-to-sign language video conversion: The SignLLM model converts input text or prompts into corresponding sign language gesture videos, which makes the information more intuitive and easier to understand.

Support for multiple sign languages: The model can generate eight different sign languages, including American Sign Language (ASL) and German Sign Language (GSL), which demonstrates its broad applicability.

First-of-its-kind multilingual sign language dataset: The SignLLM project introduces the world’s first multilingual sign language dataset, called Prompt2Sign. This dataset is essential for training and developing models that can understand and generate sign language.

Models built on the dataset: Using the Prompt2Sign dataset, the SignLLM project developed a variety of sign language generation models, marking significant progress in sign language generation technology.

The SignLLM model not only provides an important communication tool for the hearing impaired, but also advances artificial intelligence research in language understanding and generation. With this model, we can better serve multicultural and multilingual communities and promote barrier-free communication.

Project address: https://signllm.github.io/