In recent years, short videos have become very popular, especially with the rise of short video platforms such as TikTok. Many people post their daily life or work online and attract a large audience, and some have even earned their first pot of gold this way.

However, video editing is a very time-consuming task, and it often takes several hours to edit a video.

Today I'd like to recommend FunClip, an open-source automated video editing tool from Alibaba that helps anyone edit videos easily.

FunClip is a fully open-source automated video editing tool that you can install on your own computer and use offline. It uses the FunASR Paraformer series of models, open-sourced by Alibaba's Tongyi Lab, to run speech recognition on the video. You can then select any text segment or speaker in the recognition result and click the crop button to get the video for that segment.
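To make the select-and-crop idea concrete, here is a minimal Python sketch of how a chosen text segment can be mapped back to a time range. It assumes the recognizer returns per-token timestamps as `(token, start, end)` tuples in seconds; the `find_span` function and this token format are hypothetical illustrations, not FunClip's actual API.

```python
from typing import List, Optional, Tuple

# Hypothetical token format: (token, start_seconds, end_seconds).
# Paraformer-style ASR can return per-token timestamps; the structure
# FunClip uses internally may differ.
Token = Tuple[str, float, float]


def find_span(tokens: List[Token], query: str) -> Optional[Tuple[float, float]]:
    """Return (start, end) of the first run of tokens that spells `query`.

    Matching is whitespace-tokenized and case-insensitive; returns None
    when the query is empty or not found.
    """
    words = [tok.lower() for tok, _, _ in tokens]
    target = query.lower().split()
    n = len(target)
    if n == 0:
        return None
    for i in range(len(words) - n + 1):
        if words[i:i + n] == target:
            # Start of the first matched token, end of the last one.
            return tokens[i][1], tokens[i + n - 1][2]
    return None


if __name__ == "__main__":
    tokens = [("Today", 0.0, 0.4), ("we", 0.4, 0.6), ("talk", 0.6, 0.9),
              ("about", 0.9, 1.2), ("FunClip", 1.2, 1.8)]
    print(find_span(tokens, "talk about FunClip"))  # (0.6, 1.8)
```

Matching whole tokens keeps the returned boundaries aligned with timestamps the recognizer actually produced. A real implementation would also have to handle punctuation and Chinese text, where each token is typically a single character.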

With FunClip, video editing becomes very simple: unlike traditional video editing software, you don't need to split the video manually.

Building on these basic functions, FunClip offers the following features:

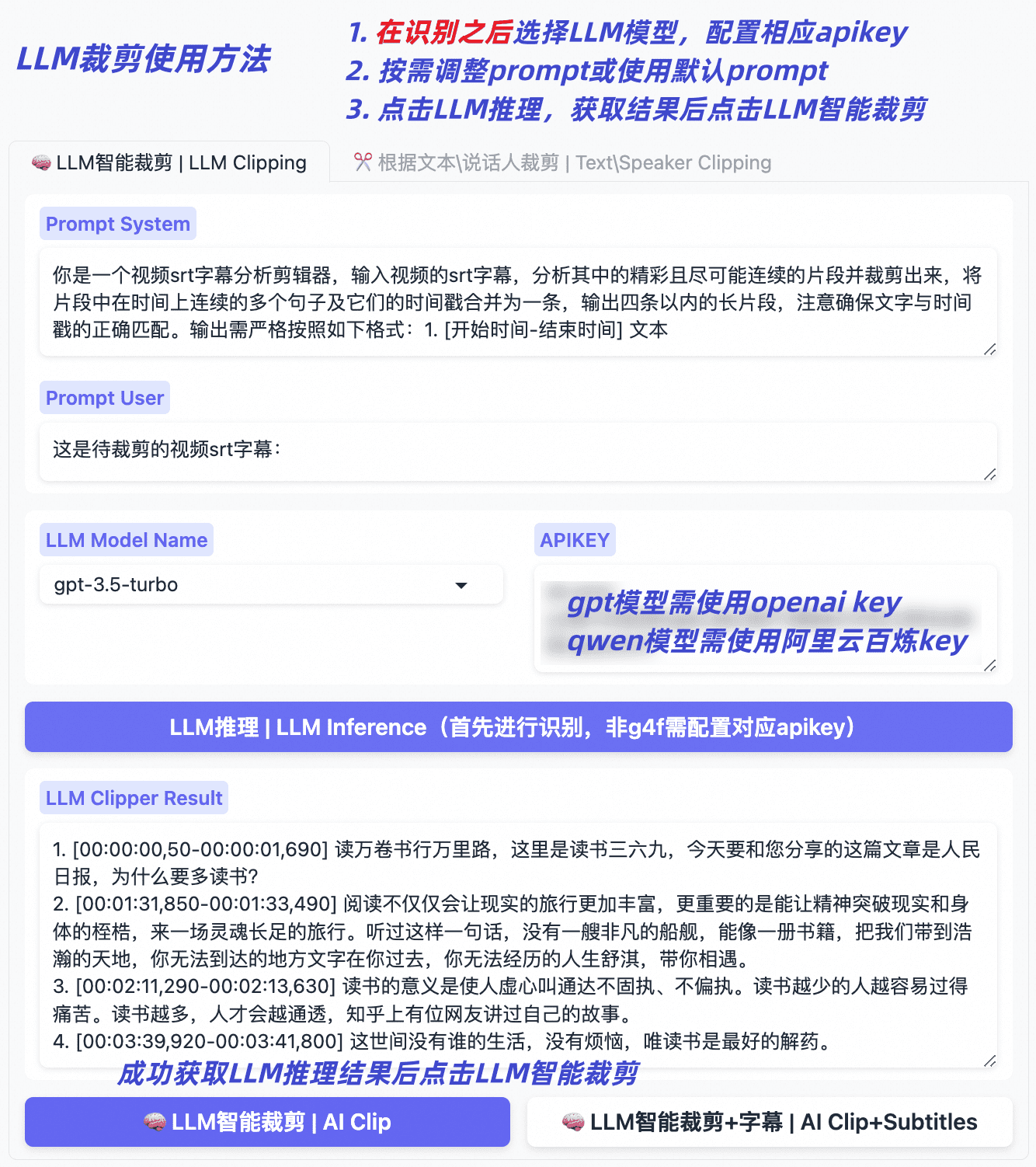

- FunClip integrates calls to several advanced large language models and provides flexible prompt configuration, aiming to explore new ways of editing video with large language models.

- FunClip uses Paraformer-Large, Alibaba's top open-source industrial-grade speech recognition model, which performs well among open-source Chinese ASR models. It has been downloaded more than 13 million times on ModelScope and can predict timestamps accurately.

- FunClip also integrates the hotword customization of SeACo-Paraformer, which lets you specify entity words, personal names, and similar terms as hotwords during speech recognition, significantly improving recognition accuracy for them.

- FunClip also includes the CAM++ speaker recognition model, so users can edit based on automatically identified speaker IDs and easily crop out the parts spoken by a specific speaker.

- All of the above is available through a Gradio interactive interface. Installation is simple, and FunClip can also be deployed on a server and used through a web page.

- FunClip also supports freely clipping multiple segments, and it automatically generates an SRT subtitle file for the full video as well as SRT subtitles for the target clips, simplifying the whole editing process.
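Speaker-based cropping and per-clip SRT subtitles can be illustrated together. The following is a minimal Python sketch, assuming each recognized sentence is a hypothetical `(speaker_id, start, end, text)` tuple with times in seconds; the function names are illustrative and not part of FunClip.

```python
from typing import List, Tuple

# Hypothetical sentence format: (speaker_id, start_seconds, end_seconds, text).
# FunClip's real data structures may differ; this only illustrates the idea.
Sentence = Tuple[str, float, float, str]


def srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    ms = round(seconds * 1000)
    h, ms = divmod(ms, 3_600_000)
    m, ms = divmod(ms, 60_000)
    s, ms = divmod(ms, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"


def speaker_spans(sentences: List[Sentence], speaker: str) -> List[Tuple[float, float]]:
    """Merge consecutive sentences by `speaker` into (start, end) spans."""
    spans: List[Tuple[float, float]] = []
    last_index = None
    for i, (spk, start, end, _) in enumerate(sentences):
        if spk != speaker:
            continue
        if spans and last_index == i - 1:
            # Same speaker kept talking: extend the current span.
            spans[-1] = (spans[-1][0], end)
        else:
            spans.append((start, end))
        last_index = i
    return spans


def to_srt(sentences: List[Sentence], shift: float = 0.0) -> str:
    """Render sentences as SRT text.

    `shift` is subtracted from every timestamp, so subtitles for a clip that
    starts at `shift` seconds in the source video begin at 00:00:00,000.
    """
    blocks = []
    for n, (_, start, end, text) in enumerate(sentences, start=1):
        blocks.append(f"{n}\n{srt_time(start - shift)} --> {srt_time(end - shift)}\n{text}\n")
    return "\n".join(blocks)
```

For a single extracted span, passing the span's start time as `shift` yields subtitles aligned with the clip rather than with the full video; the full-video SRT is simply `to_srt(sentences)`.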

FunClip has also added LLM-based intelligent clipping: it integrates models such as the Qwen and GPT series and provides a default prompt, which you can replace with your own. It also supports FunASR's open-source SeACo-Paraformer model, enabling hotword customization during video editing.
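A rough Python sketch of the LLM clipping loop: the SRT transcript is combined with an editable prompt, and time ranges are parsed out of the model's answer. The reply format `[HH:MM:SS,mmm-HH:MM:SS,mmm]` and the helper names are assumptions for illustration; FunClip's default prompt and output format may differ, and the actual call to a Qwen or GPT model is omitted.

```python
import re
from typing import List, Tuple

# Assumed reply format (hypothetical): each selected segment appears as
# "[HH:MM:SS,mmm-HH:MM:SS,mmm]", e.g. "1. [00:00:05,200-00:00:09,800] intro".
_TS = r"(\d{2}):(\d{2}):(\d{2}),(\d{3})"
_RANGE = re.compile(r"\[" + _TS + r"\s*-\s*" + _TS + r"\]")


def build_prompt(instruction: str, srt_text: str) -> str:
    """Combine a user-editable instruction with the SRT transcript."""
    return f"{instruction}\n\nSubtitles:\n{srt_text}"


def _to_seconds(h: str, m: str, s: str, ms: str) -> float:
    """Convert captured timestamp fields to seconds."""
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000


def parse_ranges(reply: str) -> List[Tuple[float, float]]:
    """Extract (start, end) pairs in seconds from the model's reply."""
    # findall returns one 8-tuple of strings per match: 4 fields per timestamp.
    return [(_to_seconds(*g[:4]), _to_seconds(*g[4:])) for g in _RANGE.findall(reply)]
```

The model call itself would sit between `build_prompt` and `parse_ranges`; the parsed ranges can then be cropped like any manually selected segment.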

Installation 🔨

Install the Python environment

FunClip only depends on a Python environment, so that is all we need to set up.

```shell
# Clone the FunClip repository
git clone https://github.com/alibaba-damo-academy/FunClip.git
cd FunClip
# Install the required Python dependencies
pip install -r ./requirements.txt
```

Install ImageMagick

If you want to use the cropping feature that automatically adds subtitles, you need to install ImageMagick:

- Ubuntu

```shell
apt-get -y update && apt-get -y install ffmpeg imagemagick
# Relax ImageMagick's security policy so it can read and write the files it needs
sed -i 's/none/read,write/g' /etc/ImageMagick-6/policy.xml
```

- MacOS

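```shell
brew install imagemagick
# BSD sed on macOS requires an explicit (here empty) backup suffix after -i.
# The path depends on your Homebrew prefix and installed ImageMagick version.
sed -i '' 's/none/read,write/g' /usr/local/Cellar/imagemagick/7.1.1-8_1/etc/ImageMagick-7/policy.xml
```

- Windows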

Download and install ImageMagick from the following address:

https://imagemagick.org/script/download.php#windows

To use FunClip, first start it with the following command:

```shell
python funclip/launch.py
```

Then visit localhost:7860 in your browser to open the home page.
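The subtitle features rely on the external programs installed above. A small Python preflight check can confirm they are on `PATH`; it assumes the usual executable names (`ffmpeg`, plus `magick` for ImageMagick 7 or `convert` for ImageMagick 6) and is a convenience sketch, not part of FunClip.

```python
import shutil
from typing import Dict


def check_tools() -> Dict[str, bool]:
    """Report whether the external tools used for subtitles are on PATH.

    Executable names are assumptions: ImageMagick 7 installs `magick`, while
    ImageMagick 6 (e.g. Ubuntu's package) provides `convert`. On Windows,
    `convert` can also match an unrelated system utility, so prefer `magick` there.
    """
    return {
        "ffmpeg": shutil.which("ffmpeg") is not None,
        "imagemagick": any(shutil.which(name) for name in ("magick", "convert")),
    }


if __name__ == "__main__":
    for tool, found in check_tools().items():
        print(f"{tool}: {'found' if found else 'MISSING'}")
```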

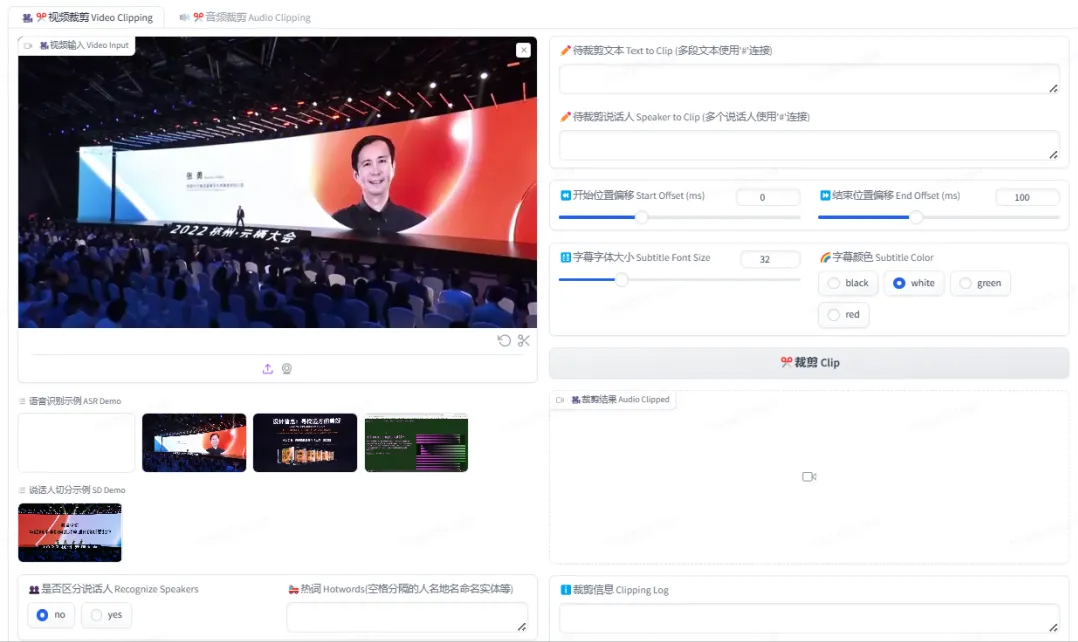

Then follow the steps below to edit the video:

- Upload your video (or use the video examples below)

- Set hotwords and the file output path (used to save recognition results, videos, etc.)

- Click the "Identify" button to get the recognition result, or click "Identify + Distinguish Speakers" to also identify speaker IDs on top of speech recognition.

- Copy the desired text segment from the recognition result into the corresponding input box, or enter a speaker ID in the corresponding box.

- Configure the clip parameters, such as offsets and subtitle settings.

- Click the "Crop" or "Crop+Subtitles" button
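The offset setting in the steps above adjusts where each clip starts and ends. Below is a minimal Python sketch of that idea, assuming offsets are given in seconds and that a negative start offset means "start earlier" (FunClip's own units and sign conventions may differ). It also merges clips that end up overlapping; `apply_offsets` and `merge_spans` are hypothetical helpers.

```python
from typing import List, Tuple

Span = Tuple[float, float]


def apply_offsets(span: Span, start_offset: float, end_offset: float, duration: float) -> Span:
    """Shift a clip's boundaries (seconds) and clamp them to [0, duration].

    Assumed convention: a negative start_offset starts the clip earlier and a
    positive end_offset ends it later.
    """
    start = max(0.0, span[0] + start_offset)
    end = min(duration, span[1] + end_offset)
    if end <= start:
        raise ValueError("offsets leave an empty clip")
    return start, end


def merge_spans(spans: List[Span]) -> List[Span]:
    """Merge overlapping or touching spans so no footage is included twice."""
    merged: List[Span] = []
    for start, end in sorted(spans):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged
```

Merging after applying offsets matters because padding two nearby segments can make them overlap, which would otherwise duplicate footage in the output.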

Please refer to the following tutorial for using large language model clipping:

FunClip also provides an online service on the ModelScope community, which you can try at the following address:

https://modelscope.cn/studios/iic/funasr_app_clipvideo/summary

You can upload your own video or audio, or use the demo examples provided by FunClip.

For more information, visit the GitHub repository:

https://github.com/alibaba-damo-academy/FunClip

Overall, FunClip is a fully open-source, locally deployable automated video editing tool that makes editing videos easier and helps us record the beautiful moments of our lives.