OpenAI has recently seen a succession of resignations from its safety team. Among those leaving is AI strategy researcher Gretchen Krueger, who joined OpenAI in 2019, worked on GPT-4 and DALL-E 2, and led OpenAI's first company-wide "red team" test in 2020. She is now one of the departing employees to issue a public warning.

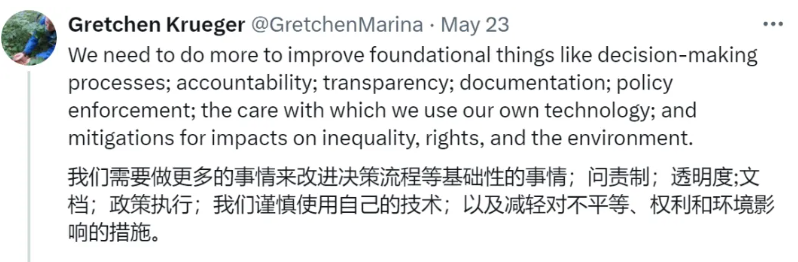

Krueger took to social media to say that OpenAI needs to improve its decision-making processes, accountability, transparency, documentation, and strategy execution, and that it should take steps to mitigate the impact of its technology on social inequality, rights, and the environment. She added that tech companies sometimes disempower those seeking to hold them accountable by sowing division among them, and that she cares deeply about preventing this.

Krueger's departure is part of a broader shakeup within OpenAI's safety team. Before her, OpenAI Chief Scientist Ilya Sutskever and Head of Superalignment Jan Leike had already announced their departures. Together, these exits have raised questions about how OpenAI makes decisions on safety issues.

OpenAI's safety work is organized into three main teams:

Superalignment Team: Focuses on controlling superintelligence that does not yet exist.

Safety Systems Team: Focuses on reducing the misuse of existing models and products.

Preparedness Team: Maps emerging risks from frontier models.

Although OpenAI has multiple safety teams, final decision-making power over risk assessment remains in the hands of leadership. The board of directors, which currently includes experts and executives from a variety of fields, has the power to override those decisions.

Krueger's departure and public comments, along with the other changes to OpenAI's safety team, have raised widespread concern about AI safety and corporate governance. These issues matter to people and communities today, and they shape how the future is planned and who gets to plan it.