Hello everyone, this is the "Stable Diffusion Getting Started" series. This installment sorts out the various models used in Stable Diffusion. The model occupies a vital position in Stable Diffusion: it determines not only the style of the output but also its quality.

But when I first came into contact with SD, I was overwhelmed by its models. Not only are there multiple file suffixes, there are also many types of models, and a novice can easily get confused at this step. In this article, the Technical Wizard systematically sorts out the models of SD for you. I believe it will be helpful for both novices and veterans!

1. Let’s start with the model suffix

To better understand the model, let's start with the model's suffix.

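In Stable Diffusion, there are two common model suffixes, .ckpt and .safetensors.

The full name of .ckpt is checkpoint. The suffix is best known from TensorFlow, where checkpoint files store model parameters and are usually used together with .meta files to resume training. The .ckpt files distributed for Stable Diffusion, however, are PyTorch checkpoints serialized with Python's pickle module.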

To put it simply, a .ckpt file is like the save file we create after clearing each level of a game. Training a model works the same way: there is no guarantee that training will succeed in one go, and it may fail midway for various reasons. So you might save once at 20% of training and again at 40%. This is one of the reasons it is called a checkpoint.
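The save-file idea can be sketched with a toy training loop. This is only an illustration under stated assumptions: the function `train`, the file name `toy.ckpt.json`, and the fake "weight" update are all made up for the example, and JSON is used instead of a real checkpoint format to keep it self-contained.

```python
import json
import os
import tempfile


def train(total_steps, ckpt_path, save_every, stop_at=None):
    """Toy training loop that saves periodically and resumes from the last save."""
    # Resume from the save file if one exists; otherwise start from scratch.
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)
    else:
        state = {"step": 0, "weight": 0.0}

    while state["step"] < total_steps:
        if stop_at is not None and state["step"] >= stop_at:
            return state  # simulate a crash or interruption
        state["step"] += 1
        state["weight"] += 0.5  # stand-in for a real gradient update
        if state["step"] % save_every == 0:
            with open(ckpt_path, "w") as f:
                json.dump(state, f)
    return state


ckpt_dir = tempfile.mkdtemp()
ckpt_file = os.path.join(ckpt_dir, "toy.ckpt.json")

# The first run is interrupted at step 50; the last save happened at step 40.
interrupted = train(100, ckpt_file, save_every=20, stop_at=50)
# The second run picks up from step 40 instead of starting at step 0.
resumed = train(100, ckpt_file, save_every=20)
print(interrupted["step"], resumed["step"], resumed["weight"])  # 50 100 50.0
```

The work done between step 40 and step 50 is lost, but everything before the last save is kept, which is exactly the trade-off of a game save.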

When mentioning .ckpt, I would like to add a word about .pt. While .ckpt is the suffix associated with TensorFlow checkpoints, .pt is the suffix PyTorch conventionally uses for saved model parameters. TensorFlow and PyTorch are both well-known deep learning frameworks; the former was released by Google and the latter by Facebook.

In addition to .pt, PyTorch models are also saved with the .pth and .pkl suffixes. There is no essential difference among them: PyTorch's torch.save serializes with Python's pickle module in every case, and the .pkl suffix merely makes that explicit.

Now that we have discussed the .ckpt model, it’s time to talk about the .safetensors model.

The .safetensors format exists because a .ckpt file is designed to let us resume training from a previous state, for example restarting from the 50% point. It therefore stores more than the weights: it can also hold the optimizer state and, because it is pickled, some Python code.

There are two problems with this approach. First, the file may contain malicious code, so it is not recommended to download and load .ckpt files from unknown or untrusted sources. Second, the files are large: a single realistic-style model is generally around 7GB, and an anime model is between 2GB and 5GB.
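To see why a pickled file can carry code, here is a minimal and deliberately harmless sketch. The class name `Payload` is made up for the example; the only thing it does on load is call `print`, but an attacker could name any other callable in its place.

```python
import contextlib
import io
import pickle


class Payload:
    # pickle stores the callable and arguments returned here,
    # and calls them again when the data is loaded.
    def __reduce__(self):
        return (print, ("arbitrary code ran during pickle.loads",))


blob = pickle.dumps(Payload())

# Capture stdout so we can inspect what happened while loading.
captured = io.StringIO()
with contextlib.redirect_stdout(captured):
    loaded = pickle.loads(blob)

print(repr(captured.getvalue()))  # 'arbitrary code ran during pickle.loads\n'
print(loaded)                     # None: what came back is not a Payload at all
```

Simply loading the bytes was enough to run the function, which is why an untrusted .ckpt file is risky even if you never use the model.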

The .safetensors format is a newer storage format launched by Hugging Face. It is a general-purpose tensor format rather than something specific to Stable Diffusion, but it has become the common way to distribute SD models. Files in this format save only the weights of the model, without the optimizer state or other training information, which means it is usually used for the final version of a model. When we only care about the performance of the model and do not need the details of the training process, this format is a good choice.

Since .safetensors saves only the weights and no code, it is safer. Because it carries less information, it is often smaller than a full .ckpt that includes training state (for the same weights at the same precision the two are about the same size), and it usually loads faster. Therefore, it is currently recommended to use the .safetensors model file.

In general, if you want to resume training with the full saved state, such as the optimizer state, you still need a .ckpt file; but if you only care about the output, the .safetensors file is the better choice!
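The "weights only, no code" idea is visible in the file layout itself: an 8-byte little-endian header length, a JSON header describing each tensor, then the raw tensor bytes. The sketch below is a simplified stand-in written with the standard library only; the function names are made up, it handles just one-dimensional float32 tensors, and it is not a replacement for the real safetensors library.

```python
import json
import struct


def write_safetensors_like(tensors):
    """Serialize {name: list of floats} using the safetensors layout (F32 only)."""
    header, payload = {}, b""
    for name, values in tensors.items():
        raw = struct.pack(f"<{len(values)}f", *values)  # little-endian float32
        header[name] = {
            "dtype": "F32",
            "shape": [len(values)],
            "data_offsets": [len(payload), len(payload) + len(raw)],
        }
        payload += raw
    header_bytes = json.dumps(header).encode("utf-8")
    # 8-byte header length, then the JSON header, then the raw tensor bytes.
    return struct.pack("<Q", len(header_bytes)) + header_bytes + payload


def read_safetensors_like(blob):
    """Parse the blob back; only JSON and raw numbers are ever interpreted."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    header = json.loads(blob[8:8 + header_len])
    data = blob[8 + header_len:]
    result = {}
    for name, info in header.items():
        if name == "__metadata__":  # optional free-form metadata, not a tensor
            continue
        begin, end = info["data_offsets"]
        count = (end - begin) // 4  # 4 bytes per float32 value
        result[name] = list(struct.unpack(f"<{count}f", data[begin:end]))
    return result


tensors = {"layer.weight": [0.5, -1.25, 2.0], "layer.bias": [0.0]}
blob = write_safetensors_like(tensors)
restored = read_safetensors_like(blob)
print(restored == tensors)  # True
```

Nothing in the reader ever calls a function named by the file, which is the key difference from pickle-based formats.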

2. Next, model classification

In Stable Diffusion, models are mainly divided into five categories: the Stable Diffusion large model, the VAE model, the Lora model, the Embedding model, and the Hypernetwork model.

2.1 Stable Diffusion Large Model

This type of model is commonly known as the "base model" and corresponds to the position below.

This type of model serves as the knowledge base of Stable Diffusion. For example, if the large model is trained entirely on anime-style (two-dimensional) pictures, the generated images will lean toward an anime style; if pictures of real people and scenes are used during training, the generated images will lean toward realism.

Since this type of model is trained on a large amount of material over a long time, its size is relatively large, usually more than 2GB, and its suffix is the .ckpt or .safetensors mentioned above.
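The "more than 2GB" figure follows from simple arithmetic: file size is roughly the number of parameters times the bytes stored per parameter. The parameter counts below are approximate, rounded figures for Stable Diffusion v1.x and should be read as assumptions for illustration, not exact values.

```python
# Approximate, rounded parameter counts for Stable Diffusion v1.x (assumed).
PARAMS = {
    "unet": 860_000_000,
    "text_encoder": 123_000_000,
    "vae": 84_000_000,
}
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def checkpoint_size_gb(precision):
    """Estimate file size in GB for weights stored at the given precision."""
    total_params = sum(PARAMS.values())
    return total_params * BYTES_PER_PARAM[precision] / 1e9


print(round(checkpoint_size_gb("fp32"), 2))  # 4.27
print(round(checkpoint_size_gb("fp16"), 2))  # 2.13
```

Under these assumptions a half-precision file lands just above 2GB and a full-precision file at a little over 4GB, which matches the sizes you typically see on download pages.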

2.2 VAE Model

VAE stands for Variational Autoencoder. It can be loosely understood as a filter: technically it is the component that decodes the latent representation into the final pixels, and its most visible effect is on the colors of the image.

Generally speaking, if no external VAE model is loaded, the overall color of the generated image tends to be darker, while with an external VAE model the overall color is brighter.

Note: The left side is a picture generated without a VAE, and the right side is a picture generated with a VAE.

However, it should be noted that some large models already have the VAE baked in during training, so even without an external VAE the output image will not be so dim.

In addition, using a VAE sometimes backfires, for example the image turns into a ruined blue picture at the last moment. In that case, change the external VAE setting to automatic.

Note: This is a ruined blue image.

2.3 Lora Model

I believe everyone has come across Lora models often. LoRA stands for Low-Rank Adaptation; the name comes from the paper "LoRA: Low-Rank Adaptation of Large Language Models". It is a fine-tuning technique for large language models proposed by Microsoft researchers and later applied to Stable Diffusion. Simply put, it lets these huge models be adapted to specific tasks (such as modifying the style) flexibly and efficiently, without training the entire model from scratch.

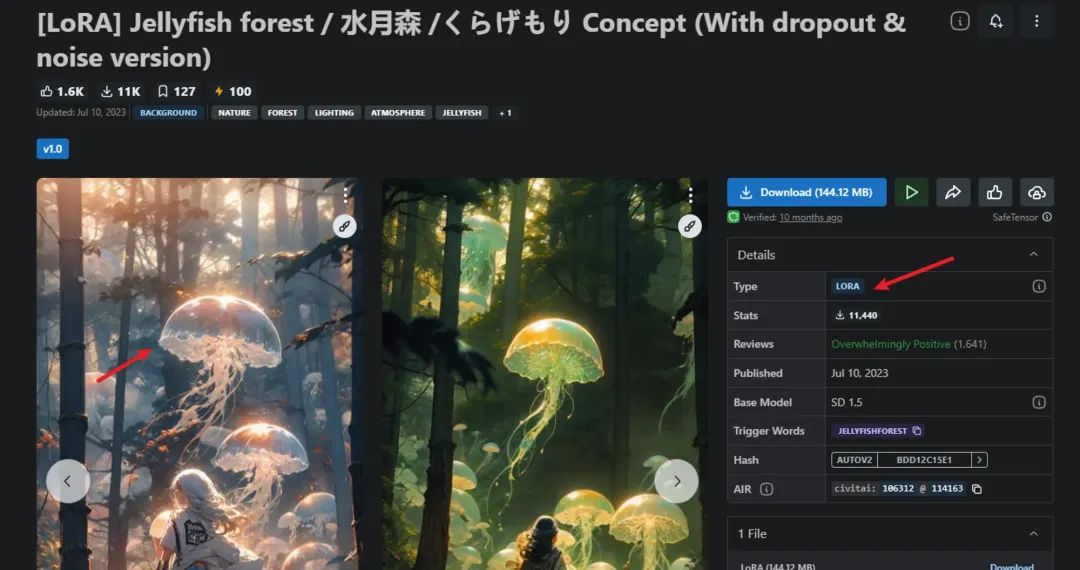

For example, the Lora model below adds a luminous forest-jellyfish effect on top of the large model. We do not need to retrain the large model to add this effect: training the large model takes a long time, and Lora is far more efficient.

It should be noted that the Lora model cannot be used alone; it must be used together with the large model above!

In addition, a Lora stores only small low-rank update matrices rather than a full copy of the weights, and it can be trained with relatively few pictures (the Lora above was trained with 100+ pictures). Its size is therefore generally not very large, usually tens to hundreds of MB, which greatly saves disk space.
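A small sketch may help show both why a Lora needs the large model and why it is so much smaller. The low-rank idea is to leave the base weight matrix untouched and add the product of two thin matrices to it. The helper names, the toy 3x3 matrices, and the 768-wide layer with rank 8 below are illustrative assumptions, not values taken from any specific Lora.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(weight, down, up, scale=1.0):
    """Return weight + scale * (up @ down) without modifying the original."""
    delta = matmul(up, down)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


def lora_param_count(d_out, d_in, rank):
    """Values stored by a rank-`rank` update for a d_out x d_in weight matrix."""
    return rank * (d_out + d_in)


# Toy case: a 3x3 base weight and a rank-1 update (up is 3x1, down is 1x3).
base = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
up = [[1.0], [0.0], [2.0]]
down = [[0.5, 0.0, 1.0]]
patched = apply_lora(base, down, up)
print(patched)  # [[1.5, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 3.0]]

# Hypothetical 768x768 layer: full matrix versus a rank-8 update.
print(768 * 768, lora_param_count(768, 768, 8))  # 589824 12288
```

The update only makes sense relative to `base`, and for the hypothetical layer it stores 48 times fewer values than the full matrix would.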

Finally, some Lora models need a trigger word to be activated (that is, the trigger word must be added to the prompt). For example, the trigger word of the Lora above is jellyfishforest.
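As a rough illustration of how the trigger word ends up in a prompt, the sketch below assembles one in the style used by the AUTOMATIC1111 WebUI, where a Lora is referenced as `<lora:filename:weight>`. Which interface you use is an assumption here, as are the helper name `build_prompt`, the base prompt, the weight 0.8, and the Lora file name (only the trigger word comes from the example above).

```python
def build_prompt(base_prompt, lora_name, trigger_word, weight=0.8):
    """Append a trigger word and a Lora tag to a base prompt."""
    lora_tag = f"<lora:{lora_name}:{weight}>"
    return ", ".join([base_prompt, trigger_word, lora_tag])


# The file name "jellyfishforest" is hypothetical; use the name of the file you downloaded.
prompt = build_prompt("a glowing forest at night", "jellyfishforest", "jellyfishforest")
print(prompt)  # a glowing forest at night, jellyfishforest, <lora:jellyfishforest:0.8>
```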

2.4 Embedding Model

The Embedding model is also called textual inversion. In Stable Diffusion, an Embedding model packs what would otherwise take a series of prompt words into learned vectors, thereby improving the stability and accuracy of image generation.

Simply put, if we want SD to generate the character Naruto from the series Naruto, we need several prompt words to describe him, such as his appearance and the color of his clothes. An Embedding packs this series of prompt words into a single new prompt word, say Naruto.

In this way, we only need to load this Embedding model and type the Naruto prompt word to generate the image of Naruto we want, which makes writing prompts more efficient!

Since the Embedding model only stores a few vectors tied to the prompt word, its size is very small, usually tens to hundreds of KB.
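The tiny file size can be checked with back-of-the-envelope arithmetic. The sketch assumes each vector has 768 values stored as 4-byte floats, which matches the text encoder of Stable Diffusion v1.x; the vector counts are made-up examples.

```python
def embedding_size_kb(num_vectors, dim=768, bytes_per_value=4):
    """Approximate size in KB of an embedding made of `num_vectors` vectors."""
    return num_vectors * dim * bytes_per_value / 1024


print(embedding_size_kb(1))   # 3.0
print(embedding_size_kb(8))   # 24.0
print(embedding_size_kb(32))  # 96.0
```

Even an embedding with dozens of vectors stays under 100 KB, thousands of times smaller than a large model.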

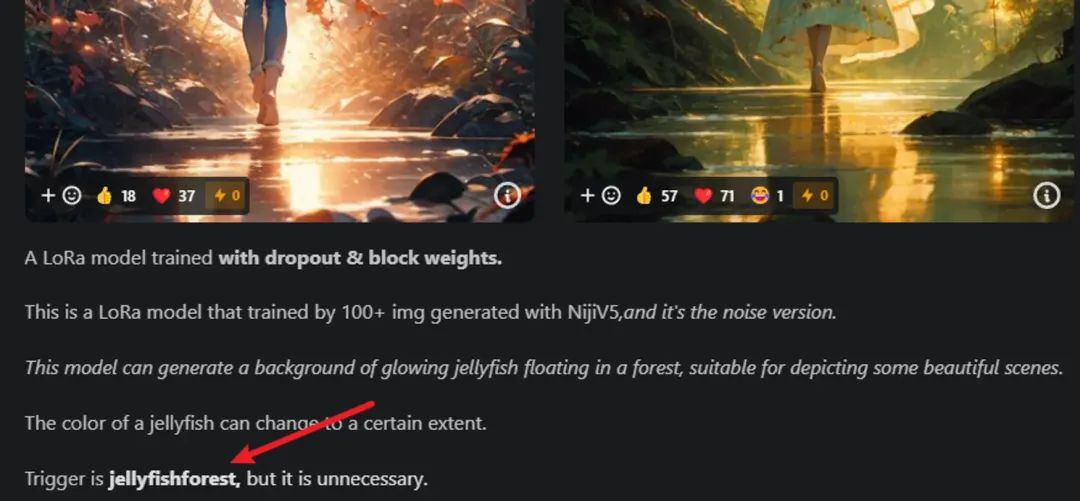

For example, take this Embedding model, which depicts a beautiful woman named Caroline Dare.

When we enter the trigger word, similar-looking women will be generated.

The generated images are not exactly the same, because the result depends on the base model you use, but the more obvious features are the same, such as the white hair.

2.5 Hypernetwork Model

Hypernetwork can be translated as "super network". It is a neural network that can quickly generate parameters for another neural network, and it is often used in NovelAI's Stable Diffusion models.

Hypernetwork can be used to fine-tune a model. For example, in an image generation model such as Stable Diffusion, a small hypernetwork is inserted to modify the output style without changing the core structure of the original model.

The role of this model actually overlaps somewhat with that of the Lora model, so in practice I personally use it less.

3. Model Pruning

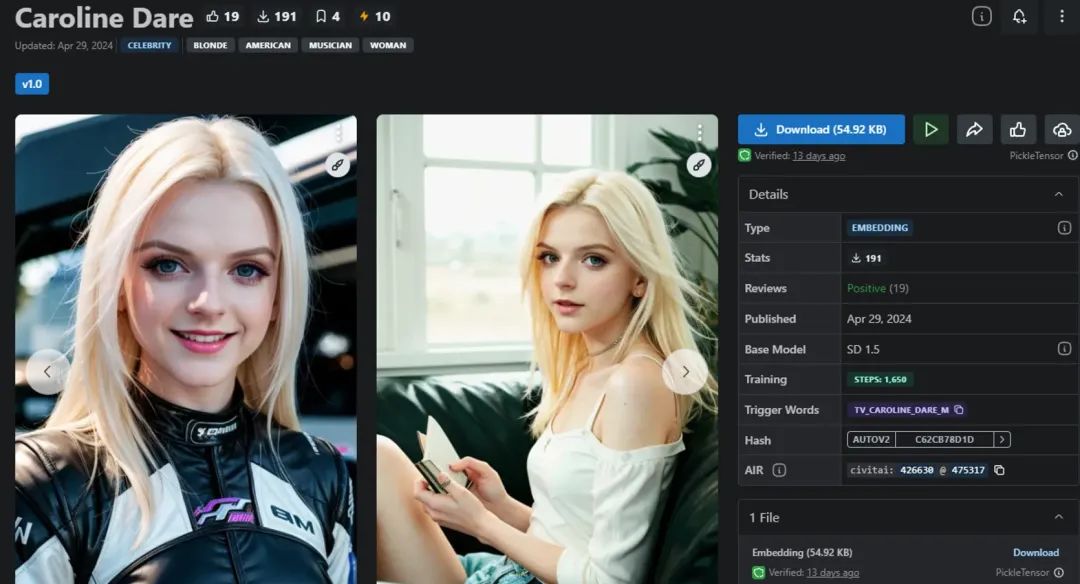

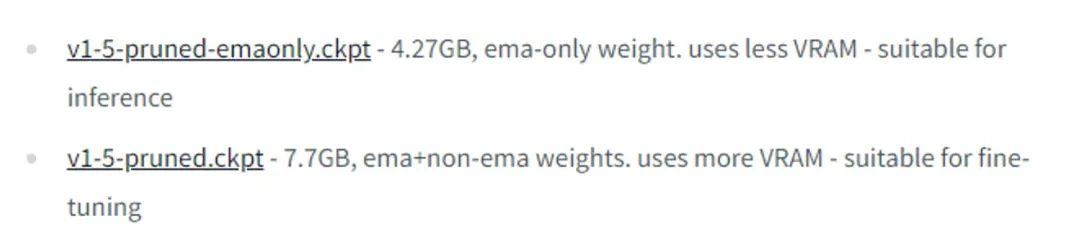

When you download a model, you may see two versions, one marked pruned and the other marked pruned-emaonly. For example, the following[1]

This is because the large model keeps two different sets of weights during training. The first set is the weights from the last training step, which are kept in the version marked pruned.

During the training of a deep learning model, the model parameters (or "weights") are adjusted in each iteration (or training step) according to the loss function to fit the data. When training is finished, the weights are the final state after repeated passes over all training samples. This set of weights represents the knowledge the model learned from the training data, without additional smoothing, and directly reflects the result of the last update.

The second set is a weighted average of the weights over recent iterations, known as EMA (Exponential Moving Average). Averaging mainly reduces the impact of short-term fluctuations, so the resulting weights generalize better and are more stable. The version marked pruned-emaonly keeps only this set.
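The averaging step itself is short. Here is a minimal sketch with a single toy weight; the decay of 0.5 is chosen only so the numbers are easy to follow by hand (real training typically uses a value very close to 1), and the raw weight values are made up.

```python
def ema_update(ema, current, decay):
    """Blend the running average with the newest weights."""
    return [decay * e + (1.0 - decay) * c for e, c in zip(ema, current)]


# Raw weights after four training steps (one toy weight per step).
raw_steps = [[1.0], [3.0], [2.0], [4.0]]

ema = raw_steps[0]
for weights in raw_steps[1:]:
    ema = ema_update(ema, weights, decay=0.5)

print(raw_steps[-1], ema)  # [4.0] [3.0]
```

The raw weight ends at 4.0 after jumping around, while the EMA weight ends at 3.0, pulled toward the recent history rather than following the very last update.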

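Since the pruned-emaonly version keeps only the EMA weights, it is smaller, occupies less video memory (VRAM), and is suitable for generating images directly. The pruned version is larger and occupies more video memory because it also keeps the non-EMA weights (the official v1-5 pruned file, for example, contains both sets), so it is more suitable for fine-tuning, as shown below: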

4. Introduction to Common Models

In addition to the above classification, Stable Diffusion models are also divided into four categories based on their uses: official models, anime (two-dimensional) models, realistic models, and 2.5D models.

4.1 Official Model

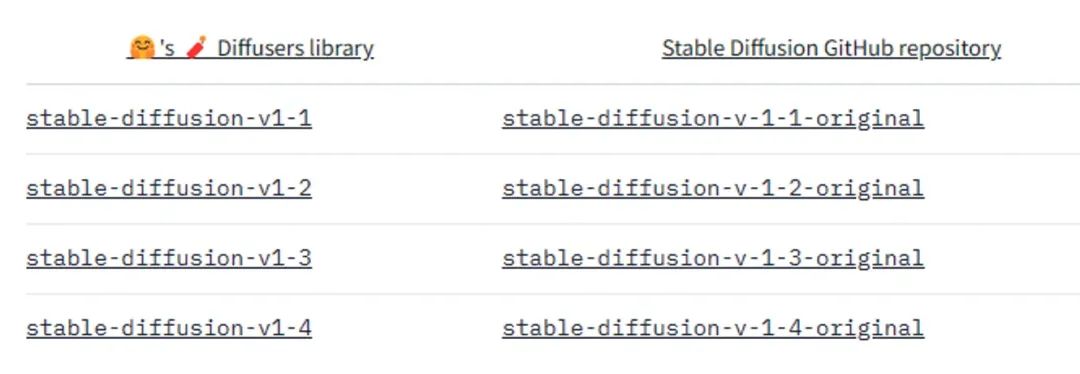

The official model has two major versions, 1.X and 2.X. Currently, there are four official versions released in 1.X, namely v1-1, v1-2, v1-3, and v1-4.

Official address: https://huggingface.co/CompVis/stable-diffusion

However, 1.X actually also has a version v1-5, which was released not by CompVis but by Runwayml. Runway is the company known for text-to-video generation. It is said that this version performs particularly well in text-to-image tasks and can generate images that better meet user needs (but I haven't tried it).

Address: Stable Diffusion v1-5 - a Hugging Face Space by runwayml[2]

2.X currently has two versions, 2-0 and 2-1, both released by stabilityai.

https://huggingface.co/stabilityai/stable-diffusion-2

https://huggingface.co/stabilityai/stable-diffusion-2-1

4.2 Anime (Two-Dimensional) Model

The most famous anime models belong to the Anything series (nicknamed the "Universal Crucible"). For example, the Autumn Leaf integration pack ships with the anything-v5-PrtRE model by default.

The Anything series currently has four basic versions: V1, V2.1, V3, and V5. Prt is a specially polished variant of V5 and is the more recommended version.

In addition to generating anime-style images, the Anything series is also highly adaptable to portraits, landscapes, animals, and so on. It is a relatively general-purpose model.

4.3 Realistic Model

Some of the more famous realistic models include Realistic Vision and others. These are some pictures released on Civitai (often called "Station C"). You can see that they are indeed very realistic.

Realistic Vision Model:

4.4 2.5D Model

2.5D means semi-3D. Simply put, 2D is a flat graphic, such as a picture, with no thickness or depth. 3D is a three-dimensional form with thickness that can be viewed from any angle, such as a 3D-printed physical model. 2.5D sits between the two: it adds some 3D elements but is not fully 3D.

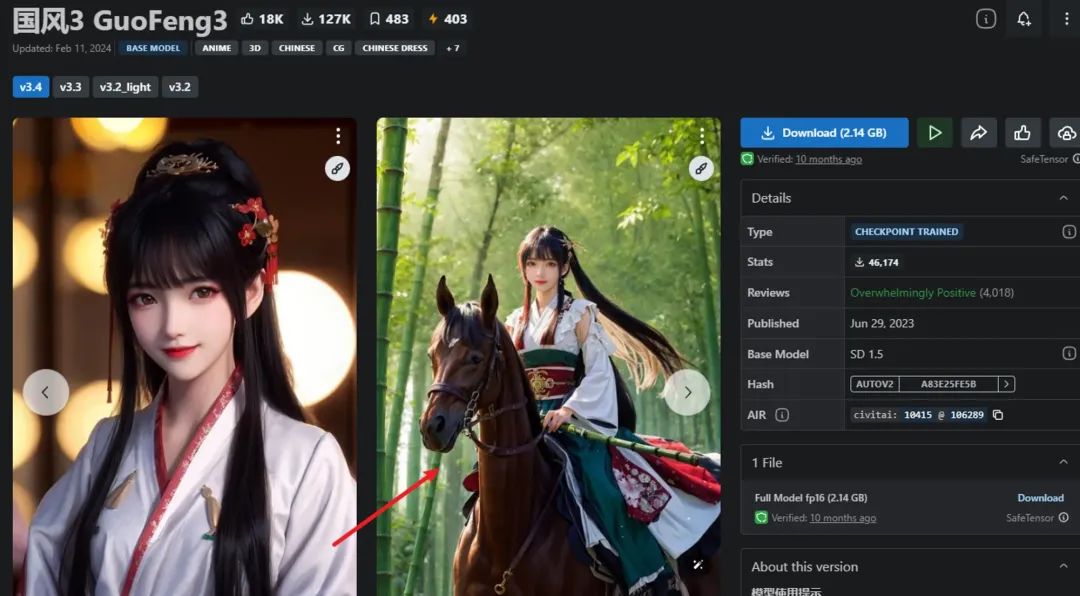

Some of the more famous 2.5D models include GuoFeng3 and others. Here are some of its renderings.

Finally, let me make a small summary. I believe that friends who have read this far now have some sense of how complex the Stable Diffusion model ecosystem is. SD is not like Midjourney and DALL-E 3, which are all-in-one models. It is more of a combination of multiple models spliced together. Although it is more troublesome to master at first, it is better suited to friends who need customization and like DIY.