Last week, OpenAI released GPT-4o, the newest and most exciting model in the GPT-n family it has developed over the years.

OpenAI's GPT-4o model surpasses its predecessors, the GPT-4 and GPT-4 Turbo models, in terms of versatility, performance, and responsiveness.

Additionally, the GPT-4o model can process audio or image input from the user and then quickly generate speech or text output. If you want to learn more about the GPT-4o model, you've come to the right place!

In this article, I will introduce the GPT-4o model in detail. Are you ready?

What is GPT-4o?

GPT-4o is the latest AI model developed by OpenAI, with multimodal capabilities that let it process and understand information in multiple formats. It can not only process text for conversation and writing, but also analyze audio and images.

For example, it can listen to a song and analyze its emotion, or view a picture and describe the scene. This ability allows GPT-4o to not only understand literal messages, but also to capture subtle nuances in communication, making conversations more natural and engaging.

In addition, GPT-4o's multimodal understanding lets it combine text, audio, and visual information in a single analysis. This opens up new applications such as AI assistants, educational tools, and creative content generation.

This advance is not just a breakthrough in the field of AI, but a major step toward AI that interacts with and understands the world in a more human-like way.

How does GPT-4o work?

OpenAI's GPT-4o model differs from GPT-4 in the way it handles audio, visual, and textual inputs. GPT-4 handled voice conversations by chaining multiple neural networks: audio was transcribed to text, the model produced a text reply, and that text was converted back to speech.

The GPT-4o model, however, does all of this work with a single neural network. As a result, GPT-4o can pick up the tone of the input, recognize multiple speakers, take background noise into account, and generate more natural and expressive responses.

How to use GPT-4o?

OpenAI has announced that the GPT-4o model is free and open to users in order to promote it globally. If you have an OpenAI account, you can try it. (If you don't have one yet, check out the ChatGPT registration tutorial: 2024 ChatGPT Detailed Registration Tutorial, a fully illustrated walkthrough of the procedure!)

However, free users can only use the GPT-4o model a limited number of times. If you need to use the model frequently, consider upgrading to a Plus membership for $20 per month, which gives you five times the usage limit of a free user.

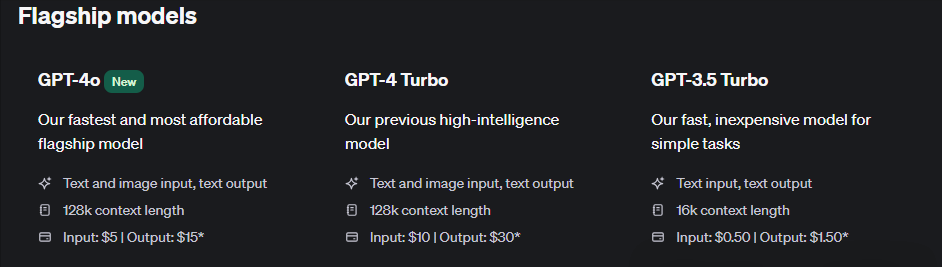

GPT-4o API Price

If you want to use the GPT-4o model through the API, it costs $5 per million input tokens and $15 per million output tokens, which is half the price of the GPT-4 Turbo model.
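To make the pricing concrete, here is a minimal sketch that estimates the cost of a single request from its token counts. It assumes the per-million-token prices quoted above still apply; the helper name `estimate_cost` and the constant names are hypothetical, chosen only for this illustration.

```python
# Prices quoted in this article (USD per one million tokens).
# These are assumptions for the example and may change over time.
GPT4O_INPUT_PRICE_PER_M = 5.00
GPT4O_OUTPUT_PRICE_PER_M = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request (hypothetical helper)."""
    input_cost = input_tokens / 1_000_000 * GPT4O_INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * GPT4O_OUTPUT_PRICE_PER_M
    return input_cost + output_cost


if __name__ == "__main__":
    # Example: a 2,000-token prompt that produces a 500-token reply.
    print(f"${estimate_cost(2_000, 500):.4f}")
```

With these prices, a 2,000-token prompt and a 500-token reply work out to roughly $0.0175, so output tokens weigh three times as much as input tokens in the bill.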

GPT-4o Features

GPT-4o is OpenAI's newest and most advanced model that opens the door to a variety of exciting usage scenarios and new opportunities. This model has advanced multimodal capabilities and better performance than its predecessors. Let's take a closer look at GPT-4o's capabilities.

GPT-4o Performance

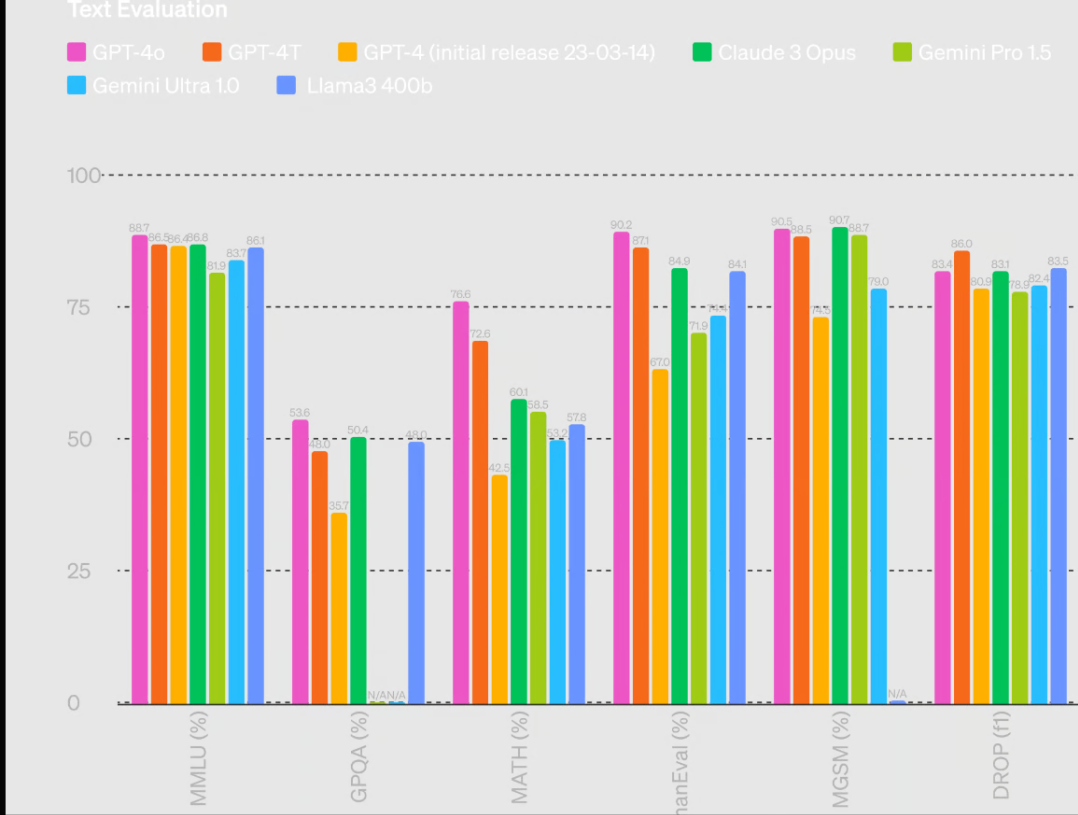

In various benchmark comparisons, GPT-4o outperformed its predecessor, GPT-4, as well as Claude 3 Opus and Gemini Pro 1.5. Not only does it have a wide range of applications, but it also processes data and outputs results in real time, and it scores highly for text quality.

According to the OpenAI article, GPT-4o scored a whopping 88.7% on the MMLU test, which measures language comprehension, while GPT-4 and Claude 3 Opus scored 86.6% and 86.8%, respectively.

In addition, on the MATH test, which evaluates mathematical problem solving, GPT-4o led the way with a high score of 76.6%. It also scored 53.6% on GPQA and 90.2% on HumanEval.

Visual comprehension

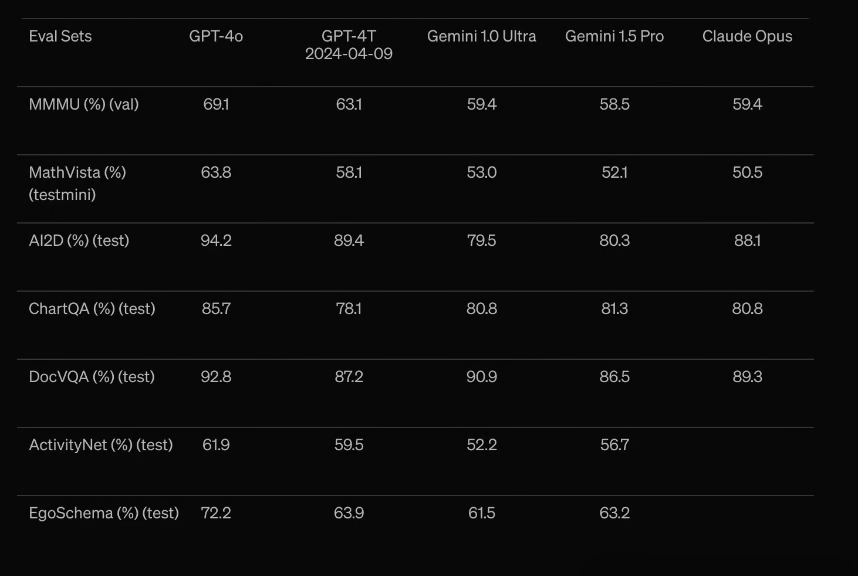

One of the most remarkable features of the GPT-4o model is its visual comprehension. It can analyze images, video, and video call data in real time and generate human-like responses based on what it sees.

According to OpenAI, GPT-4o far outperforms other similar models and any of its previous versions in tests of understanding pictures, charts, and graphs.

Beyond these test results, in practice you can ask GPT-4o questions about real-time images. It not only understands your question, but also quickly interprets the image and then gives a concise, human-like answer.
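Asking a question about an image can also be done through the API. The sketch below assumes the official `openai` Python package (v1 or later) is installed and that an `OPENAI_API_KEY` environment variable is set. The helper names `build_image_question` and `ask_about_image`, and the file name `photo.png`, are hypothetical and used only for illustration.

```python
import base64


def build_image_question(question: str, image_bytes: bytes,
                         mime_type: str = "image/png") -> list:
    """Build a chat message list pairing a text question with one image."""
    # The image is embedded as a base64 data URL inside the message.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }
    ]


def ask_about_image(question: str, image_path: str) -> str:
    """Send the question and image to GPT-4o and return the text answer."""
    # Imported lazily so the payload builder works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(image_path, "rb") as f:
        messages = build_image_question(question, f.read())
    response = client.chat.completions.create(model="gpt-4o", messages=messages)
    return response.choices[0].message.content


if __name__ == "__main__":
    # "photo.png" is a placeholder; point this at any local PNG file.
    print(ask_about_image("What is happening in this picture?", "photo.png"))
```

Keeping the payload builder separate from the network call makes it easy to inspect exactly what is sent before spending any tokens.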

Speech/audio processing

GPT-4o is also very strong at processing speech and audio, with a response time almost as fast as a human's. While a typical human responds in a conversation in about 250 milliseconds, GPT-4o takes about 320 milliseconds to analyze the speech and respond.

In contrast, voice conversations took about 5.4 seconds with GPT-4 and 2.8 seconds with GPT-3.5. GPT-4o is much faster, and talking to it is almost as smooth as talking to a real person.
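As a quick back-of-the-envelope check, the latency figures quoted above can be turned into speedup factors. The numbers below are taken from this article; the names `speedup` and `PREVIOUS_LATENCY_MS` are hypothetical.

```python
# Response times quoted in this article, in milliseconds.
GPT4O_LATENCY_MS = 320
PREVIOUS_LATENCY_MS = {"GPT-3.5": 2_800, "GPT-4": 5_400}


def speedup(old_ms: float, new_ms: float) -> float:
    """Return how many times faster the new latency is than the old one."""
    return old_ms / new_ms


if __name__ == "__main__":
    for model, latency_ms in PREVIOUS_LATENCY_MS.items():
        factor = speedup(latency_ms, GPT4O_LATENCY_MS)
        print(f"GPT-4o responds about {factor:.1f}x faster than {model}")
```

By these figures, GPT-4o responds nearly 17 times faster than GPT-4 and nearly 9 times faster than GPT-3.5.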

While GPT-4o currently has fixed voices for each language, OpenAI has announced that it will be adding more kinds of voices in the coming weeks. Even so, GPT-4o's existing voices already sound very much like real people, expressing emotion, speaking with natural pauses, and speaking fluently.