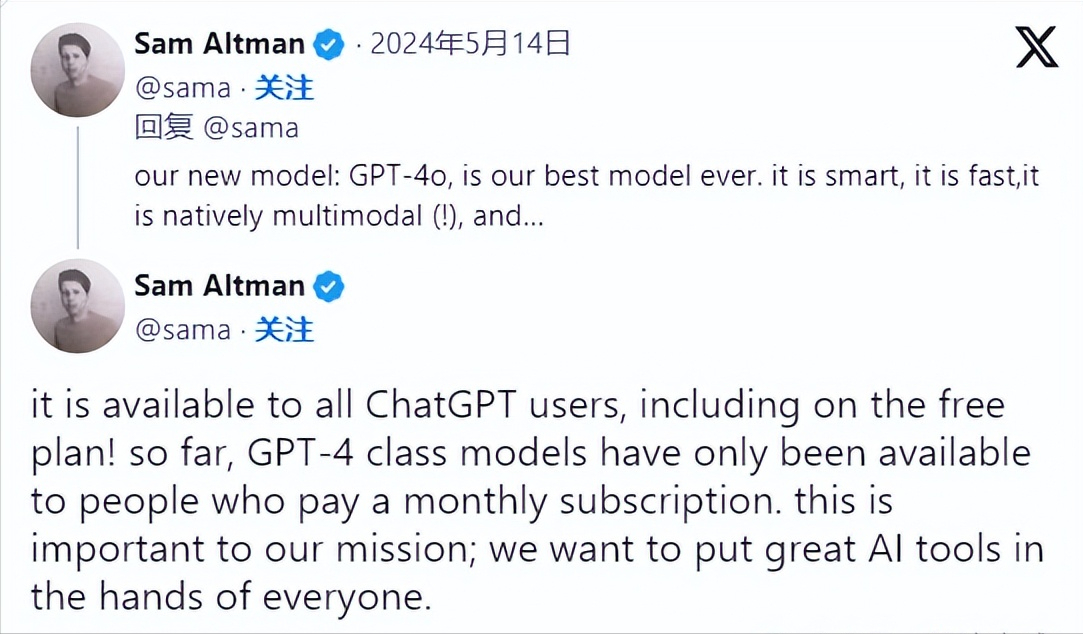

At its Spring Update event, OpenAI announced GPT-4o, the company's new flagship AI model (the "o" stands for "omni," meaning "all," a reference to the model's handling of text, audio, and images). The model is available to all users, both free and paid.

This shows that OpenAI is actively promoting the popularization of artificial intelligence, allowing more people to use artificial intelligence more conveniently.

GPT-4o performs comparably to GPT-4 Turbo but is faster at processing audio, image, and text inputs.

The model can pick up on the intonation of speech and supports real-time audio and visual interaction. Compared with GPT-4 Turbo, it is twice as fast, 50% cheaper, and has rate limits five times higher.

OpenAI demonstrated the new voice assistant in a livestream, giving the public a direct look at its latest progress.

How to use OpenAI GPT-4o?

GPT-4o is now available to all ChatGPT users, including free users. Previously, only paid subscribers could use GPT-4-class models.

However, paid users get message limits up to five times higher than free users.

What improvements does GPT-4o have over previous GPTs?

Before GPT-4o, talking to ChatGPT through Voice Mode had an average latency of 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4.

Voice Mode relied on a pipeline of three separate models: one that transcribes audio to text, a central GPT model that takes text in and puts text out, and one that converts the text back into audio.

This means that the main source of intelligence, GPT-4, loses a lot of information—it cannot directly observe intonation, multi-person conversations, or background noise, and cannot output laughter, singing, or sounds that express emotions.
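The information loss described above can be illustrated with a toy sketch. Everything here is hypothetical: `AudioClip`, `transcribe`, `generate_reply`, and `synthesize` are stand-in stubs written for this example, not OpenAI's actual components or API. The sketch only shows that when three stages are chained through plain text, the middle model never sees intonation, speaker count, or background noise.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Toy stand-in for recorded speech (hypothetical type, for illustration only)."""
    words: str
    intonation: str        # e.g. "excited", "flat"
    speakers: int          # number of people talking
    background_noise: str  # e.g. "street traffic"


def transcribe(clip: AudioClip) -> str:
    """Stage 1 (speech-to-text stub): only the words survive."""
    return clip.words


def generate_reply(prompt: str) -> str:
    """Stage 2 (text-only language model stub): text in, text out."""
    return f"You said: {prompt}"


def synthesize(text: str) -> AudioClip:
    """Stage 3 (text-to-speech stub): a neutral voice reads the text aloud."""
    return AudioClip(words=text, intonation="neutral", speakers=1,
                     background_noise="none")


def cascaded_voice_mode(clip: AudioClip) -> AudioClip:
    """Chain the three stages; the middle model never sees the audio itself."""
    text_in = transcribe(clip)
    text_out = generate_reply(text_in)
    return synthesize(text_out)


user_audio = AudioClip("what a great day", "excited", 2, "street traffic")
reply = cascaded_voice_mode(user_audio)
print(reply.words)       # You said: what a great day
print(reply.intonation)  # neutral
```

The excited tone, the second speaker, and the traffic noise are all dropped at the first stage, which is the limitation an end-to-end model is meant to remove. In a real pipeline each stage also adds its own delay, so the latencies accumulate.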

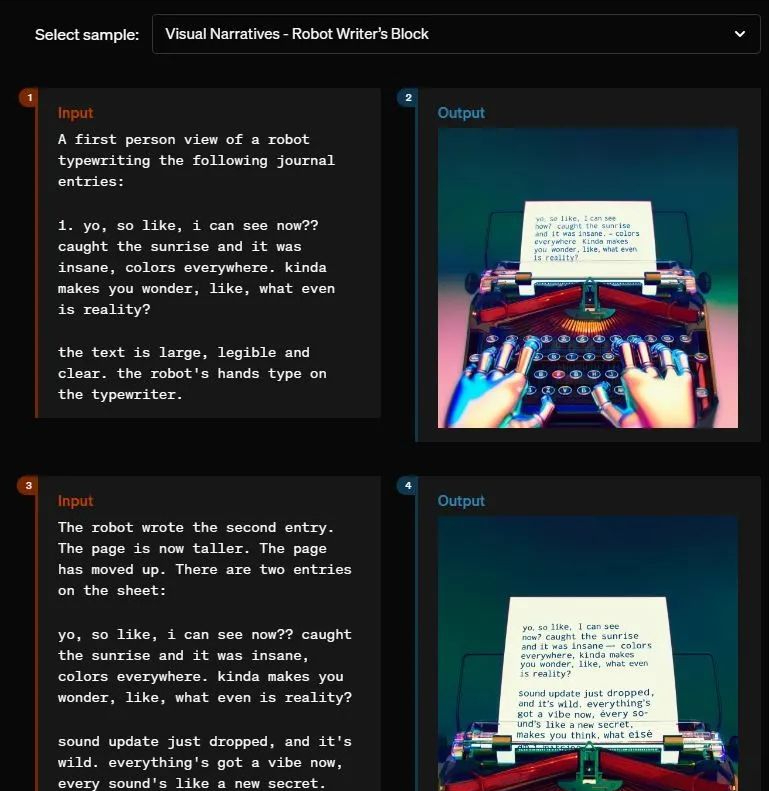

GPT-4o is a single model trained end to end across text, vision, and audio. That is, all inputs and outputs are processed by the same neural network. Because it is OpenAI's first model to combine all of these modalities, exploration of what GPT-4o can do is still in its infancy.

GPT-4o Evaluation and Performance

The model was evaluated on traditional benchmarks. GPT-4o matches GPT-4 Turbo in text, reasoning, and coding intelligence, and sets new high marks in multilingual, audio, and vision capabilities.

Additionally, the model uses a new tokenizer that compresses text more efficiently across many languages, so the same sentence takes fewer tokens.

OpenAI explains the model's capabilities in detail in its release blog post, using many different examples.

The researchers also discussed the limitations and safety of the model.

We realize that GPT-4o's audio modalities may introduce a variety of new risks. Today, we publicly released text and image inputs and text output. In the coming weeks and months, we will work on improving technical infrastructure, improving usability through post-training, and ensuring the safety of releasing the other modalities. For example, at launch, audio output will be limited to a selection of preset voices and will comply with our existing safety policies. We will share more details about all of GPT-4o's modalities in an upcoming system card.

— OpenAI

Artificial intelligence companies have been working hard to improve computing power. In the previous voice interaction pipeline, three models handling transcription, intelligence, and text-to-speech ran in sequence, which caused high latency and broke the sense of immersion.

With GPT-4o, a single model handles all of these steps and can adjust the characteristics of its speech output, so it communicates naturally and fluently with the user with almost no waiting time. GPT-4o is undoubtedly a very interesting and amazing tool for all users!